Key Takeaways

- Former Intel CEO Pat Gelsinger predicts quantum computing (QC) will displace GPU-based AI infrastructure by the end of the decade.

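- Recent breakthroughs by Google, and by Quantinuum with Microsoft, indicate that fault-tolerant QC is advancing faster than expected. Quantinuum and Microsoft demonstrated logical error rates 800x lower than the underlying physical error rates.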

- The LoadSyn community is highly skeptical, demanding proof of immediate, tangible utility before accepting the premise of GPU displacement.

- QC remains highly niche, excelling only at specific optimization and simulation tasks, while classical GPUs dominate general-purpose computing.

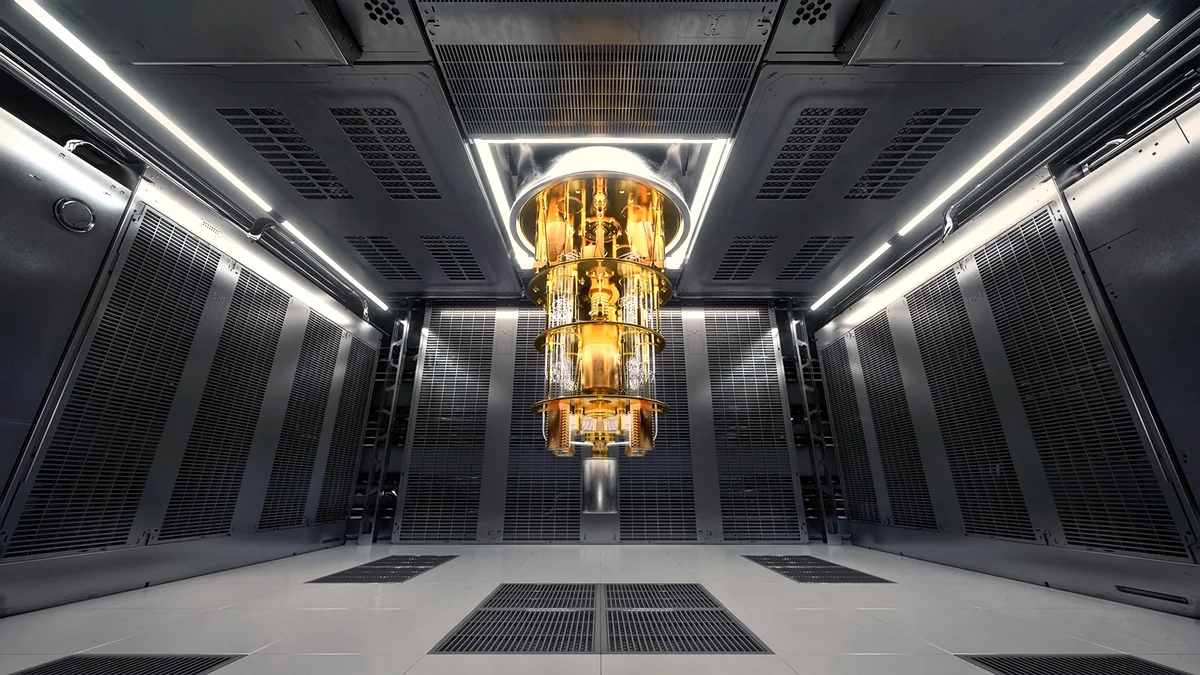

The AI gold rush is built on silicon. Specifically, the massive, parallel processing power of NVIDIA GPUs, which have become the trillion-dollar foundation of the current technological boom. But what if that foundation has an expiration date? Former Intel CEO Pat Gelsinger recently threw down the gauntlet, predicting that quantum computing, which he places alongside classical computing and AI in a ‘holy trinity’ of computing, will go mainstream far faster than consensus suggests and displace GPU dominance by 2030. It’s a bold claim that challenges the very roadmap of the PC hardware industry. We dive into the science behind the prediction, and why the gaming community is rolling its eyes.

Gelsinger’s claims in brief: QC will go mainstream much faster than industry consensus expects (roughly 2 years rather than 2 decades); QC will displace GPUs by the end of the decade; and QC forms a ‘holy trinity’ of computing alongside classical computing and AI.

The Quantum Gauntlet: The Breakthroughs That Are Shifting the Timeline

Gelsinger’s timeline isn’t pulled from thin air; it’s based on a rapid acceleration in fault-tolerant quantum computing (FTQC). For years, QC was held back by fragile qubits and massive error rates. That is changing. Quantinuum and Microsoft recently reached the crucial ‘Level 2: Resilient’ phase, demonstrating logical circuit error rates 800 times lower than the underlying physical error rates. Meanwhile, Google’s Willow chip showed errors falling exponentially as its error-correcting code was scaled up to larger grids of physical qubits, a feat experts called a ‘very important milestone.’ These advances indicate that the core engineering hurdle, qubit stability, is being addressed much faster than the conservative two-decade estimates assumed.
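The idea that adding more physical qubits can make a logical qubit *more* reliable can be illustrated with a toy model. This is not the surface code Google’s Willow uses, nor the scheme Quantinuum and Microsoft ran: it is a classical distance-d repetition code that stores one logical bit in d physical bits and decodes by majority vote. Assuming each physical bit flips independently with probability p below 0.5, decoding fails only when more than half the bits flip, so the logical error rate shrinks roughly exponentially as d grows. Real quantum codes must also correct phase errors, so treat this strictly as an analogy. The function name and the sample rate p = 0.01 are hypothetical choices for illustration.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that majority-vote decoding of a distance-d repetition code fails.

    Toy model (an assumption, not a real QC error model): each of the d physical bits
    flips independently with probability p, and decoding fails when more than half flip.
    """
    if d % 2 == 0:
        raise ValueError("use an odd code distance so the majority vote is unambiguous")
    threshold = d // 2 + 1  # smallest number of flips that defeats the majority vote
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(threshold, d + 1))


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate, chosen only for illustration
    for d in (1, 3, 5, 7):
        rate = logical_error_rate(p, d)
        print(f"d={d}: logical error {rate:.2e} ({p / rate:,.0f}x below physical)")
```

With p = 0.01, each step from d to d + 2 cuts the logical error rate by roughly a factor of 30. That is the same qualitative behavior, error suppression that compounds as the code grows, that makes scaled-up error correction such a significant milestone.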

The Fandom Pulse: Why Gamers Are Calling ‘Vaporware’

Despite the technical milestones, the LoadSyn community remains deeply skeptical. Our Fandom Pulse analysis shows widespread fatigue with ‘breakthroughs’ that have no tangible, real-world utility. For the average PC gamer, the question isn’t whether a quantum computer can solve a specific, difficult-to-verify mathematical problem; the question is: can it improve my frame rates or run the next generation of demanding software? Until QC can demonstrate clear, immediate advantages in general-purpose computing, the consensus remains that it’s niche hype.

The Quantum Utility Bias

The most significant hurdle for QC adoption isn’t technical; it’s utility. For a technology to displace GPUs, it must be dramatically better at the tasks GPUs perform: rendering graphics, running physics engines, and training general AI models. Currently, QC’s advantages are limited to specific optimization and simulation tasks, and even there they have mostly been demonstrated on specialized benchmarks. It cannot, yet, run Crysis.

Classical GPUs vs. Quantum Computing: Application Scope

| Criteria | Classical Computing (GPUs) | Quantum Computing (QC) |
|---|---|---|
| Core Architecture | Transistors (bits: 0 or 1) | Qubits (superpositions of 0 and 1) |
| Primary Function | General-purpose tasks, rendering, large-scale data processing, deep learning | Highly specific optimization, complex simulation (chemistry, materials science), factorization |
| Current Utility for Gaming | Essential (ray tracing, AI upscaling, physics) | Zero (no known practical application yet) |
| Key Limitation | Moore’s Law scaling limits, energy consumption | Qubit fragility; most platforms require cooling to near absolute zero |
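The qubit row of the table can be made concrete with a few lines of simulation. A minimal sketch, assuming the standard textbook model: a single qubit is a pair of complex amplitudes for |0⟩ and |1⟩, the Hadamard gate turns |0⟩ into an equal superposition, and measuring yields each outcome with probability equal to the squared magnitude of its amplitude. The last helper shows why simulating qubits on classical hardware gets expensive: n qubits need 2^n amplitudes. The function names and the 16-bytes-per-amplitude figure (double-precision complex numbers) are assumptions for illustration.

```python
import math

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1. A classical bit is just 0 or 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def measurement_probabilities(state):
    """Born rule: the probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    return tuple(abs(a) ** 2 for a in state)


def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Classical memory for an n-qubit state: 2**n complex amplitudes.

    16 bytes per amplitude assumes double-precision complex numbers (two 8-byte floats).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    plus = hadamard(ZERO)
    print(measurement_probabilities(plus))  # both outcomes roughly 0.5
    for n in (10, 30, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

At 50 qubits the full state already needs 2^50 × 16 bytes, roughly 18 petabytes. That exponential growth is where quantum hardware could shine on simulation problems, but it says nothing about speeding up the rendering workloads in the left-hand column.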

The Hybrid Future: How QC Might Enter the Gaming Ecosystem

Closing the QC Gap: Technical Accelerants

- Room Temperature Operation: Research is advancing atom-thin transducers that could eliminate the need for near-absolute zero cooling, massively reducing cost and footprint.

- Hybrid Supercomputing: Companies like Quantinuum are integrating QC hardware with NVIDIA’s Grace Blackwell platforms, using AI to optimize quantum algorithms for the constraints of current and future hardware.

- Post-Quantum Cryptography (PQC): The looming threat of QC breaking current public-key encryption is forcing governments and the financial sector to transition, with agencies such as NIST and the NSA setting standards and timelines. That shift will drive massive investment in, and standardization of, quantum-safe systems.
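The hybrid-supercomputing bullet describes classical processors steering quantum ones. One common pattern for that division of labor is a variational loop: the quantum device evaluates a cost for a given set of circuit parameters, and a classical optimizer updates those parameters. The sketch below assumes the simplest possible case, a one-qubit circuit Ry(θ)|0⟩ whose Z expectation value is cos θ, and computes that value exactly in place of real hardware. Gradients come from the parameter-shift rule, which needs only two extra circuit evaluations. This is a generic textbook pattern, not Quantinuum’s or NVIDIA’s actual software stack; the function names, learning rate, and step count are hypothetical.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for a quantum device: <Z> after applying Ry(theta) to |0>.

    Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so
    <Z> = cos^2(theta/2) - sin^2(theta/2) = cos(theta).
    Real hardware would estimate this from repeated measurements; here it is exact.
    """
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of <Z> via the parameter-shift rule: two shifted circuit evaluations."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2


def hybrid_minimize(theta0: float, learning_rate: float = 0.4, steps: int = 50) -> float:
    """Classical gradient descent that repeatedly queries the (simulated) quantum step."""
    theta = theta0
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


if __name__ == "__main__":
    theta = hybrid_minimize(theta0=0.5)
    print(f"theta = {theta:.4f}, <Z> = {quantum_expectation(theta):.4f}")  # approaches pi and -1
```

In a real hybrid system, each call to `quantum_expectation` would be a batch of shots on quantum hardware, while the optimizer and any AI-driven circuit design run on the classical side.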

The 2030 Deadline: A Strategic Warning, Not a Certainty

Gelsinger’s prediction is less a technical guarantee and more a strategic warning: the timeline for QC is accelerating, and the industry must prepare for a fundamentally different computing paradigm. While QC will not replace your RTX 5090 for rendering Cyberpunk 2077 in 2030, it will likely be integrated into cloud-based AI services, solving the most complex optimization problems (like game physics or massive world generation) that current GPUs struggle with. The GPU is safe for general gaming for the foreseeable future, but the AI infrastructure built upon it is vulnerable to a quantum leap.

Frequently Asked Questions

Will quantum computers ever be used for graphics rendering or gaming?

Not directly. Classical GPUs are optimized for the parallel linear algebra required for rendering. QC offers speedups only for specific problem classes: exponential for some, such as factoring and simulating molecules and materials, and modest or still unproven for optimization. None of these carries over to rendering. It is far more likely that QC will be used to design better chips or generate massive, complex game worlds via AI, which are then rendered by classical GPUs.
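The ‘parallel linear algebra’ behind rendering is largely one small operation repeated across millions of independent items. A minimal sketch in plain Python rather than GPU code: every vertex is multiplied by the same 4x4 transform matrix and then divided by its w component (the perspective divide). Because no vertex depends on any other, a GPU can run the loop body on thousands of cores at once. The matrix values and function names are illustrative, not taken from any particular engine.

```python
from typing import List, Sequence

Matrix4 = Sequence[Sequence[float]]
Vec4 = Sequence[float]


def transform_vertex(m: Matrix4, v: Vec4) -> List[float]:
    """Multiply one homogeneous vertex (x, y, z, w) by a row-major 4x4 matrix."""
    return [sum(m[row][col] * v[col] for col in range(4)) for row in range(4)]


def transform_all(m: Matrix4, vertices: Sequence[Vec4]) -> List[List[float]]:
    """Apply the same matrix to every vertex, then do the perspective divide by w.

    Each iteration is independent of the others, which is the data-parallel pattern
    a GPU spreads across its cores; here it simply runs sequentially.
    """
    out = []
    for v in vertices:
        x, y, z, w = transform_vertex(m, v)
        out.append([x / w, y / w, z / w])
    return out


if __name__ == "__main__":
    # Hypothetical transform: scale by 2, then translate by (1, 0, 0).
    m = [
        [2.0, 0.0, 0.0, 1.0],
        [0.0, 2.0, 0.0, 0.0],
        [0.0, 0.0, 2.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
    print(transform_all(m, [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 1.0]]))
```

This workload has no structure that a quantum computer’s known speedups exploit, which is why rendering stays on classical hardware.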

What is the biggest immediate risk of quantum computing?

The biggest immediate risk is that a large, fault-tolerant quantum computer running Shor’s algorithm could break current public-key encryption (RSA, ECC), which secures global finance and commerce. Because encrypted data captured today could be decrypted once such machines exist, the transition to Post-Quantum Cryptography (PQC) is a mandatory, near-term focus for governments and tech companies.
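The risk to RSA comes from Shor’s algorithm, which reduces factoring a number N to finding the order r of a base a modulo N, meaning the smallest r with a^r ≡ 1 (mod N). A quantum computer can find r efficiently, and the best known classical methods cannot. The sketch below shows only the classical side of that reduction and finds r by brute force. Brute force is hopelessly slow for real RSA moduli, and it is exactly the step a quantum computer would replace. The function names and the toy modulus N = 15 are illustrative.

```python
from math import gcd
from typing import Optional, Tuple


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm performs efficiently on a quantum computer.
    A base sharing a factor with n would already reveal that factor via gcd;
    it is rejected here for simplicity.
    """
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing from Shor's algorithm: turn the order of a into factors of n.

    Returns None for an unlucky base (odd order, or a**(r/2) == -1 mod n); the
    algorithm then simply retries with a different random base.
    """
    r = find_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    # n divides (half - 1) * (half + 1) but neither factor alone, so both gcds are nontrivial.
    return gcd(half - 1, n), gcd(half + 1, n)


if __name__ == "__main__":
    print(find_order(7, 15))         # order of 7 modulo 15
    print(factor_from_order(7, 15))  # recovers the factors 3 and 5
```

A closely related quantum algorithm solves the discrete-logarithm problem behind ECC, which is why both schemes are being replaced rather than merely given longer keys.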