The memory market is in turmoil, and for PC gamers, the news isn’t good. At the heart of this disruption is the rise of SOCAMM2, a modular LPDDR5X memory architecture built for AI data centers and edge computing. While SOCAMM2 delivers strong power efficiency, density, and modularity for servers, its rapid adoption is a primary driver behind the severe RAM price hikes currently plaguing the consumer market.

This unprecedented memory crunch, fueled by the insatiable demand for high-performance AI memory, is projected to keep prices elevated well into 2026, with only potential stabilization or single-digit increases expected in 2027. For gamers, this means the era of cheap, abundant memory is over for the foreseeable future, making strategic purchasing and careful planning more vital than ever.

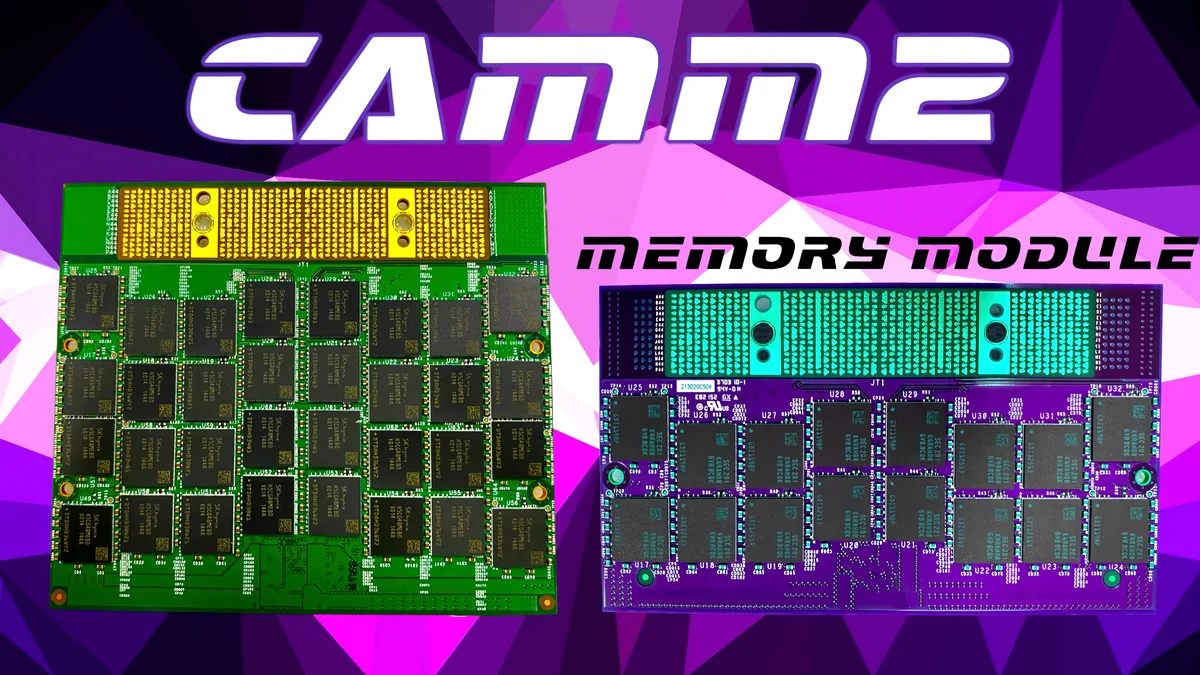

SOCAMM2 & CAMM2: Unsung Heroes Reshaping Server Memory (and Your Wallet)

You might not have heard much about SOCAMM2 or the related CAMM2 standard, but these are innovations set to redefine memory architecture. Initially conceived by Dell and later standardized by JEDEC as CAMM2, CAMM (Compression Attached Memory Module) was designed as a modular alternative to SO-DIMMs and soldered memory in thin and light laptops, with the CAMM2 standard covering both DDR5 and LPDDR5/5X. Its promise was clear: significant space savings, superior power efficiency, and crucially, improved serviceability compared to soldered LPDDR.

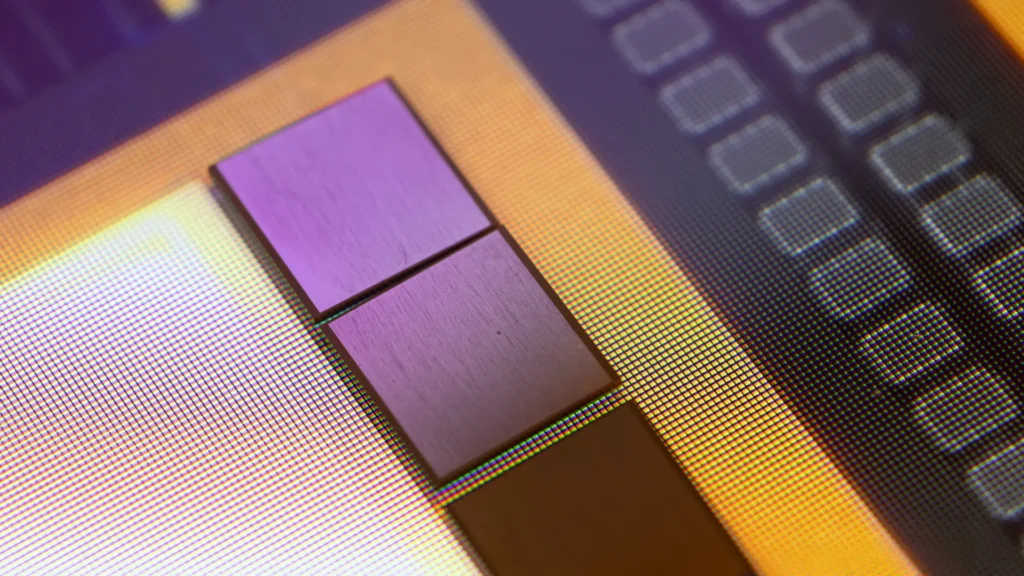

Fast forward, and this modular LPDDR5X technology, now often referred to as SOCAMM (Small Outline Compression Attached Memory Module) in the server space, has evolved far beyond its laptop origins. Developed by NVIDIA in partnership with industry giants like Micron, Samsung, and SK Hynix, SOCAMM2 is rapidly becoming a critical memory architecture for the demanding world of AI data centers and edge computing. It’s purpose-built to bridge performance and efficiency gaps, combining LPDDR5X’s high bandwidth and energy efficiency with a modular, socketed form factor that allows for unprecedented flexibility and scalability in AI-centric platforms. This shift, however, has profound, albeit indirect, implications for every PC builder and gamer.

Technical Specifications: SOCAMM2 (LPDDR5X)

Memory Standard Comparison

| Metric | SOCAMM (LPDDR5X) | HBM3E/4 | GDDR7 | DDR5 RDIMM |
|---|---|---|---|---|
| Form Factor | 14×90 mm, socketed | Co-packaged | On-board BGA | DIMM (long) |
| Capacity/Module | Up to 128 GB | ~36 GB/stack | Up to 32 GB | Up to 256 GB+ |
| Peak Bandwidth | ~100–150 GB/s | 0.8–1.2+ TB/s | ~150–200 GB/s | ~50–80 GB/s |
| Data Rate | 6400–8533 MT/s | Very high | 28–32+ Gbps | 4800–6400 MT/s |
| Power Efficiency | Excellent | Good–Moderate | Good | Moderate–Poor |
| Latency (ns) | ~30–45 | ~5–10 | ~10–20 | ~70–90 |
| Modularity | High | None | None | High |
| Cost/GB | Moderate | Very High | Moderate–High | Low–Moderate |

SOCAMM occupies a unique and highly advantageous position for AI inference workloads. Its modularity and LPDDR-class power efficiency make it ideal for scaling AI at the edge and in compact server environments, striking a balance between the extreme bandwidth of HBM and the traditional flexibility of RDIMMs.
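To see how the data rate and peak bandwidth rows in the comparison relate, here is a minimal sketch of the standard peak-bandwidth arithmetic (transfers per second multiplied by bytes per transfer). The bus widths used, 128 bits for a SOCAMM module and 64 data bits for a DDR5 RDIMM, are assumptions for illustration and are not taken from the table; the function and dictionary names are hypothetical.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes) for one module."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


# ASSUMPTION: bus widths are illustrative and not stated in the article.
ASSUMED_BUS_WIDTH_BITS = {
    "SOCAMM (LPDDR5X)": 128,
    "DDR5 RDIMM": 64,  # data bits only, ECC bits excluded
}

# Data-rate ranges copied from the "Data Rate" row of the table.
DATA_RATES_MTS = {
    "SOCAMM (LPDDR5X)": (6400, 8533),
    "DDR5 RDIMM": (4800, 6400),
}

for name, (low, high) in DATA_RATES_MTS.items():
    width = ASSUMED_BUS_WIDTH_BITS[name]
    low_bw = peak_bandwidth_gbs(low, width)
    high_bw = peak_bandwidth_gbs(high, width)
    print(f"{name}: {low_bw:.1f} to {high_bw:.1f} GB/s")
```

Under the assumed 128-bit width, the SOCAMM data rates work out to roughly 102 to 137 GB/s, which sits inside the table's ~100–150 GB/s range. These are theoretical peaks; sustained bandwidth in a real system will be lower.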

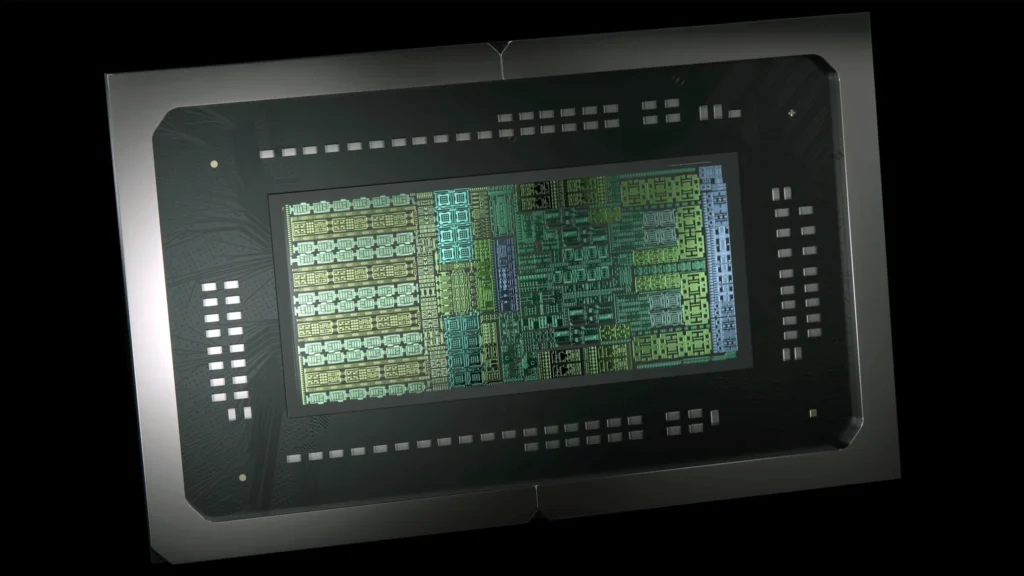

The AI Gold Rush: Why Your RAM Prices Are Skyrocketing

The memory industry is currently experiencing what Micron’s Executive Vice President of Operations, Manish Bhatia, calls an “unprecedented” memory chip shortage. This isn’t just a bump in the road; it’s a fundamental market shift driven by the explosive growth of AI. Hyperscale data centers, powering everything from large language models to complex AI inference, are demanding vast quantities of high-performance memory, particularly High-Bandwidth Memory (HBM) and enterprise-grade DDR5/SOCAMM2. Platforms like NVIDIA’s Grace CPU Superchip and Grace Hopper architectures are at the forefront of this demand, requiring memory solutions that can keep their powerful processors fed with data at incredible speeds.

In response to this insatiable appetite, major memory manufacturers like Samsung, Micron, and SK Hynix have strategically redirected significant portions of their production capacity. Why? Because the margins on these specialized AI memory chips are substantially higher. While this is a boon for their bottom line, it creates a severe scarcity of conventional DRAM, the very memory that powers your gaming PC. Every wafer allocated to an HBM stack or a SOCAMM2 module for an AI server is a wafer denied to the consumer market, leading to a tightening supply and, inevitably, soaring prices for the RAM you need.

- Explosive Growth of AI Data Centers: The primary driver is the massive, ongoing build-out of AI infrastructure, requiring vast quantities of high-performance HBM and enterprise-grade DDR5/SOCAMM2 memory.

- Manufacturer Prioritization: Key memory producers are actively reallocating production capacity to higher-margin AI memory, leaving less for the consumer market.

- HBM’s Wafer Footprint: Producing a given number of bits as HBM consumes up to three times as much silicon wafer capacity as producing them as standard DRAM, exacerbating supply constraints.

- Concentrated Supply: With only a handful of major manufacturers globally, shifts in priorities have immediate and severe ripple effects.

- Supply Chain Disruptions: Long lead times required to bring new fabrication capacity online further restrict the ability to meet surging demand.

- Recovering Consumer Demand: As consumer demand for PCs recovers, it adds further pressure to an already strained memory supply.
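The wafer footprint point in the list above can be made concrete with a little arithmetic. The sketch below assumes the "up to three times" figure applies per bit produced and uses a hypothetical 20% shift of wafers to HBM; the function name, the 100-wafer baseline, and the 20% share are illustrative assumptions, not figures from any manufacturer.

```python
def bit_output(total_wafers: float, hbm_wafer_share: float,
               hbm_wafers_per_bit_ratio: float = 3.0) -> tuple[float, float]:
    """Return (conventional_bits, hbm_bits), measured in units of
    'bits one wafer yields when used for standard DRAM'.

    hbm_wafers_per_bit_ratio is how many times more wafer area HBM needs
    per bit; 3.0 reflects the article's upper figure (an assumption here).
    """
    if not 0.0 <= hbm_wafer_share <= 1.0:
        raise ValueError("hbm_wafer_share must be between 0 and 1")
    hbm_wafers = total_wafers * hbm_wafer_share
    conventional_bits = total_wafers - hbm_wafers
    hbm_bits = hbm_wafers / hbm_wafers_per_bit_ratio
    return conventional_bits, hbm_bits


# Hypothetical scenario: 100 wafers, 20% of them moved to HBM.
conventional, hbm = bit_output(total_wafers=100, hbm_wafer_share=0.20)
total = conventional + hbm
print(f"Conventional DRAM bits: {conventional:.1f} (was 100.0)")
print(f"HBM bits:               {hbm:.1f}")
print(f"Total bits shipped:     {total:.1f}")
```

In this scenario, conventional output falls by the full 20% while the HBM wafers yield only about a third of the bits they would have as standard DRAM, so total bit supply shrinks even though no wafer sits idle.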

Gamers’ Grief: The High Cost of High Performance

“The corporates need a middle finger right now. Should be given to them soon.”

The sentiment above perfectly encapsulates the anger and frustration boiling within the PC gaming community. Many feel a profound sense of helplessness, believing that memory manufacturers are engaging in price gouging, leveraging the AI boom to artificially inflate costs. This isn’t just about minor increases; we’ve seen popular 32GB DDR5 kits, which once sold for under $100, now retailing closer to $350, a three- to fourfold increase in just a few months. This drastic hike has led to widespread regret among those who delayed their upgrades, now staring down prohibitive costs.

The Road Ahead: When Will RAM Prices Stabilize?

Predicting market trends is always challenging, but the consensus among industry analysts is clear: elevated memory prices are here to stay for the foreseeable future. Forecasts suggest that high prices will persist well into 2026, with some reports indicating that further increases of 20–40% could occur in early 2026 if capacity remains constrained. While there’s a glimmer of hope for stabilization, or perhaps even single-digit increases, in 2027, a significant price correction isn’t anticipated before then.
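For anyone budgeting an upgrade, the figures quoted in this article translate into simple arithmetic. The sketch below takes the roughly $100 and $350 kit prices mentioned earlier and applies the forecast 20–40% range; the function names are hypothetical, and the results are only as reliable as the forecasts themselves.

```python
def price_multiple(old_price: float, new_price: float) -> float:
    """How many times the old price the new price is."""
    return new_price / old_price


def percent_increase(old_price: float, new_price: float) -> float:
    """Percentage increase from old_price to new_price."""
    return (new_price - old_price) / old_price * 100


def projected_range(current_price: float, low_pct: float = 20.0,
                    high_pct: float = 40.0) -> tuple[float, float]:
    """Price range after a further increase of low_pct to high_pct percent."""
    return (current_price * (1 + low_pct / 100),
            current_price * (1 + high_pct / 100))


# Approximate 32GB DDR5 kit prices quoted in the article.
old, new = 100.0, 350.0
low, high = projected_range(new)

print(f"Multiple:  {price_multiple(old, new):.1f}x")
print(f"Increase:  {percent_increase(old, new):.0f}%")
print(f"Projected: ${low:.0f} to ${high:.0f} if the 20-40% forecast holds")
```

Swapping in the current price of the kit you are watching gives a rough ceiling to weigh against buying now.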

The Memory Landscape for Gamers: A Double-Edged Sword

The Pros

- Future System Efficiency: Modular CAMM2 tech could trickle down to high-end consumer devices for thinner, efficient builds.

- Optimized Performance: AI-driven memory innovations will eventually offer higher performance peaks for consumers.

The Cons

- Significantly Higher Costs: Skyrocketing RAM costs make high-performance gaming increasingly expensive.

- Limited Upgradeability: More soldered LPDDR in laptops means fewer future memory upgrade paths.

- Reduced Availability: Consumer DRAM is becoming scarcer as manufacturers prioritize AI components.

- Long-Term Affordability: Sustained high prices raise questions about the long-term viability of the hobby.

Your Burning RAM Questions, Answered

Will RAM prices ever go down?

Is AI really to blame for high RAM prices?

Should I buy DDR4 or wait for DDR5/6?

What is SOCAMM2 and why should I care if I’m a gamer?

Final Verdict

The narrative around memory has fundamentally shifted. SOCAMM2 and the surge in AI demand represent an undeniable technological leap, but this innovation comes at a steep cost for the consumer. The era of cheap, abundant memory for PC gamers is, for the foreseeable future, over. Silicon wafer capacity is now a strategic asset reallocated towards high-margin AI components. Adapting to this era will require strategic planning and a clear understanding that RAM is no longer a commodity, but a costly bottleneck.