The notion of data centers floating among the stars, powered by an endless sun and cooled by the vacuum of space, has long been a staple of science fiction. Today, fueled by the insatiable demand of artificial intelligence workloads and the ambitious pronouncements from tech giants, this vision is rapidly migrating from the realm of fantasy to the drawing boards of aerospace engineers. But is this truly a groundbreaking solution to Earth’s escalating data demands, or merely a monumental engineering fantasy destined to crash and burn? At LoadSyn, we cut through the hype to explore the hard science, dissecting whether the allure of orbital computing can withstand the cold, hard realities of physics, engineering, and economics.

Key Takeaways: Orbital Data Centers – Dream vs. Reality

- The Allure: Driven by AI’s massive energy demands, terrestrial limitations, and the promise of limitless solar power and natural cooling in space.

- The Hard Truths: Face immense engineering hurdles in power generation, extreme thermal management, radiation hardening for consumer-grade hardware, limited bandwidth, and nearly impossible in-situ maintenance.

- Current Players: Companies like Lonestar Data Holdings (lunar storage), Axiom Space (ISS nodes), Google (Project Suncatcher, with radiation-tested TPUs), SpaceX (distributed LEO compute), and Starcloud (centralized orbital platforms) are actively pursuing different approaches.

- Economic & Community Concerns: Currently astronomically expensive with questionable ROI compared to terrestrial options. Community skepticism revolves around regulatory evasion, environmental impact (space debris), and fundamental practicality.

- The Future: Likely a hybrid model where orbital solutions complement terrestrial ones, serving specialized ‘edge’ cases for space-based operations, disaster recovery, and data sovereignty rather than replacing hyperscale Earth data centers.

The Allure of the Orbital Cloud: Why Tech Giants Look Beyond Earth

Terrestrial data centers are hitting a wall. The relentless march of AI, demanding ever-more computational power, has pushed energy consumption to unprecedented levels. This surge exacerbates an already critical situation where land and water resources for new facilities are becoming increasingly scarce, leading to growing community pushback against their noise, resource strain, and environmental impact. It’s a complex web of problems that leaves tech giants searching for a ‘blank slate’ – and space, seemingly, offers just that. The perceived benefits are compelling: boundless solar energy, inherent cooling in the vacuum of space, enhanced physical security from terrestrial threats, and the tantalizing prospect of data sovereignty beyond national jurisdictions.

Key Proposed Benefits of Orbital Data Centers:

- Unlimited Solar Energy: Constant exposure to sunlight promises greener, more reliable power than Earth-based grids.

- Natural Radiative Cooling: The cold background of space offers a seemingly ‘free’ heat sink for radiating waste heat away.

- Enhanced Physical Security: Isolation from terrestrial threats like natural disasters, geopolitical conflicts, and physical breaches.

- Reduced Latency for Space-Based Apps: Processing data closer to its source (e.g., Earth observation satellites) avoids the delay of downlinking raw data to the ground first.

- Data Sovereignty: Potential to operate under international space law, offering a neutral ground for sensitive data beyond national borders.

Google CEO Sundar Pichai articulated this ‘moonshot’ ambition with Project Suncatcher, stating, “One of our big moonshots is figuring out how we can one day have data centers in space so that we can better harness the energy from the sun, which is 100 trillion times greater than what we produce on Earth today.”

Engineering’s Final Frontier: The Gauntlet of Orbital Computing

While the vision of off-world computing is compelling, the practicalities of operating complex, power-hungry hardware in the unforgiving vacuum of space introduce monumental engineering hurdles. These challenges often defy intuitive solutions, demanding a meticulous, physics-first approach. In this section, we’ll systematically deconstruct why making a server farm function reliably anywhere from a few hundred kilometers up in low Earth orbit to nearly 400,000 kilometers away on the Moon is far more complicated than simply strapping a GPU to a rocket.

Powering the Stars: The Solar Mirage

The promise of ‘unlimited solar energy’ in space is often cited, yet the reality is far from a magic bullet. Solar panels in a suitable orbit can receive near-constant sunlight, but their output per unit area is not dramatically higher than that of terrestrial panels in full sun. The challenge lies in the sheer scale required. The International Space Station’s (ISS) 200 kW solar array system spans over half a football field, and deploying it was an incredibly complex and expensive undertaking. When you consider that a single NVIDIA H200 GPU can draw around 0.7 kW (or 1 kW with overhead), the scale mismatch becomes stark: an ISS-class array could feed only about 200 such GPUs. To power even a fraction of a modern hyperscale AI data center, you’d need hundreds of these colossal space-based arrays.

Power Scale: ISS vs. Modern AI Compute

| Category | Specification | Value |
|---|---|---|
| International Space Station (ISS) | Peak Power Output | 200 kW |
| International Space Station (ISS) | Solar Array Area | 2,500 m² (over half a football field) |
| Modern AI Compute (NVIDIA H200 GPU) | Typical Power Draw per GPU | ~0.7 kW (or 1 kW with overhead) |
| Modern AI Compute (NVIDIA H200 GPU) | GPUs per Ground-Based Rack | Up to 72 |
| Equivalency | GPUs an ISS Array Could Power | ~200 GPUs |
| Equivalency | ISS Arrays Needed for 100,000 GPUs | ~500 ISS-sized satellites |
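The equivalency rows follow from simple division, and a short sketch makes the arithmetic easy to rerun with other figures. The constants below are the values quoted in this article (200 kW per ISS-class array, about 1 kW per GPU with overhead); the function names are illustrative only.

```python
import math

# Figures quoted in the article, not independent measurements.
ISS_ARRAY_POWER_KW = 200.0   # peak output of the ISS solar array system
GPU_POWER_KW = 1.0           # one H200-class GPU including overhead


def gpus_per_array(array_kw: float = ISS_ARRAY_POWER_KW,
                   gpu_kw: float = GPU_POWER_KW) -> int:
    """Whole number of GPUs one array can feed at peak output."""
    return int(array_kw // gpu_kw)


def arrays_needed(gpu_count: int,
                  array_kw: float = ISS_ARRAY_POWER_KW,
                  gpu_kw: float = GPU_POWER_KW) -> int:
    """ISS-sized arrays required to power gpu_count GPUs, rounded up."""
    return math.ceil(gpu_count * gpu_kw / array_kw)


if __name__ == "__main__":
    print(gpus_per_array())        # 200
    print(arrays_needed(100_000))  # 500
```

Using the bare 0.7 kW figure instead of 1 kW raises the per-array count to 285 GPUs, which does not change the overall picture.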

The Thermal Wall: Cooling in a Vacuum

A common misconception is that ‘space is cold, so cooling a data center there must be easy.’ This couldn’t be further from the truth. In the vacuum of space, there is no air or fluid to facilitate convection, the primary cooling mechanism on Earth. Instead, heat must be moved and rejected through conduction and radiation alone. This means heat generated by components needs to be meticulously conducted away to large, often complex, radiator panels that then radiate that heat into space. Consider the ISS’s Active Thermal Control System (ATCS): a marvel of engineering designed to dissipate just 16 kW of heat, yet it requires massive surface areas. An AI data center, with thermal loads orders of magnitude higher, would demand radiator systems of truly gargantuan proportions, adding immense bulk and complexity to any orbital platform. The physics simply mandate it.

As one expert succinctly put it, countering the common assumption: “I’ve seen quite a few comments about this concept where people are saying things like, ‘Well, space is cold, so that will make cooling really easy, right?’ Um… No. Really, really no.”
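To get a feel for why radiators grow so large, here is a rough sketch based on the Stefan-Boltzmann law for radiated power, P = εσA(T⁴ − T_sink⁴). The emissivity, radiator temperature, sink temperature, and two-sided panel are illustrative assumptions, not figures from any real design. The model also ignores absorbed sunlight and Earth's infrared glow, so real radiators would need more area than this estimate.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4.
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w: float,
                     radiator_temp_k: float = 300.0,  # assumed panel temperature
                     sink_temp_k: float = 3.0,        # assumed deep-space background
                     emissivity: float = 0.9,         # assumed surface emissivity
                     sides: int = 2) -> float:        # assumed: both faces radiate
    """Idealized radiator area needed to reject heat_w watts by radiation alone."""
    flux_per_side = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_per_side * sides)


if __name__ == "__main__":
    for load_kw in (16, 100, 100_000):
        area = radiator_area_m2(load_kw * 1_000)
        print(f"{load_kw:>7} kW -> {area:,.0f} m^2")
```

Under these assumptions, 100 kW of heat needs roughly 120 m² of panel. Area scales linearly with heat load, so a 100 MW cluster lands in the range of a hundred thousand square meters.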

Cosmic Rays & GPU Graveyards: Radiation Hardening

Beyond thermal management, the space environment poses another existential threat to commercial electronics: radiation. Cosmic rays and solar flares bombard satellites with charged particles, leading to devastating effects. Single-event upsets (SEUs) can flip bits, corrupting data without permanent damage. Worse are single-event latch-ups (SELs), which can cause short circuits and permanently burn out gates. Over time, total ionizing dose (TID) effects degrade chip performance, slowing transistors and increasing power consumption. Modern GPUs and high-bandwidth RAM, with their incredibly small geometry transistors and vast die areas, are particularly vulnerable; they simply aren’t designed for this relentless assault. While some shielding is possible, it’s often inadequate and heavy. True radiation hardening requires ‘Radiation Hardness By Design’ (RHBD), which fundamentally alters chip architecture, often at the cost of significant performance – turning a cutting-edge GPU into something akin to a 20-year-old processor.
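To make the single-event upset concrete, the sketch below flips one bit in the 32-bit floating-point encoding of a number and shows how much the value changes. It only illustrates the effect of a bit flip in software; it does not model radiation physics or any particular chip, and `flip_bit` is a hypothetical helper.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Return value with one bit of its IEEE 754 float32 encoding flipped."""
    if not 0 <= bit < 32:
        raise ValueError("bit must be in the range 0..31")
    # Reinterpret the float32 bytes as an unsigned 32-bit integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # Toggle the chosen bit and reinterpret the result as a float32 again.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


if __name__ == "__main__":
    weight = 1.0
    print(flip_bit(weight, 0))   # lowest mantissa bit: barely changes the value
    print(flip_bit(weight, 31))  # sign bit: -1.0
    print(flip_bit(weight, 30))  # top exponent bit: inf
```

The same physical event can be harmless or catastrophic depending on which bit it hits. Large memories full of model weights are a big target for exactly that reason.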

The Bandwidth Bottleneck & Maintenance Nightmare

Even if we could power, cool, and protect these orbital data centers, two practical hurdles remain: data transfer and maintenance. Current reliable satellite communication typically hovers around 1 Gbps. Compare this to terrestrial data centers, where rack-to-rack interconnects of 100 Gbps or more are standard, and the communication bottleneck becomes immediately apparent. This limited bandwidth would severely restrict the types of workloads that could be efficiently run in orbit. Furthermore, the idea of maintenance in space is, quite frankly, a nightmare. On Earth, swapping a failed GPU or replacing a server takes minutes. In orbit, every repair is an immensely costly, time-consuming, and often impossible endeavor. The inherently high failure rates of complex electronics like GPUs, exacerbated by the harsh space environment, would mean a ‘disposable’ server farm, a concept that is utterly unsustainable given the astronomical launch costs per kilogram.
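A quick calculation shows what the gap between 1 Gbps and 100 Gbps means in practice. The link speeds come from the paragraph above, while the 10 TB payload is a made-up example size. The sketch ignores protocol overhead and the fact that a satellite is only intermittently in view of a ground station, both of which would make the orbital case worse.

```python
def transfer_hours(data_terabytes: float, link_gbps: float) -> float:
    """Hours to move data_terabytes over a link of link_gbps, with no overhead."""
    bits = data_terabytes * 8e12          # 1 TB = 10^12 bytes = 8 * 10^12 bits
    seconds = bits / (link_gbps * 1e9)    # 1 Gbps = 10^9 bits per second
    return seconds / 3600


if __name__ == "__main__":
    dataset_tb = 10.0  # hypothetical dataset size, chosen only for illustration
    for name, gbps in (("satellite link", 1.0), ("terrestrial interconnect", 100.0)):
        print(f"{name:>25}: {transfer_hours(dataset_tb, gbps):.2f} h")
```

For the 10 TB example, the 1 Gbps link needs about 22 hours, while the 100 Gbps interconnect finishes in roughly 13 minutes.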

Orbital Data Centers: A Technical Trade-Off

Pros

- Access to near-constant solar energy.

- Cold space background available as a radiative heat sink (though heat rejection is complex).

- Enhanced physical security from terrestrial threats.

- Potential for new data sovereignty models.

- Low-latency processing for other space assets.

Cons

- Astronomical launch costs per kilogram.

- Extreme engineering challenges for power, cooling, and radiation hardening.

- High vulnerability of commercial hardware (GPUs, RAM) to space radiation.

- Limited communication bandwidth to Earth compared to fiber optics.

- Near-impossible in-situ maintenance and repair, leading to ‘disposable’ infrastructure.

- Risk of contributing to space debris (Kessler Syndrome).

From Lunar Vaults to ISS Nodes: Who’s Actually Building?

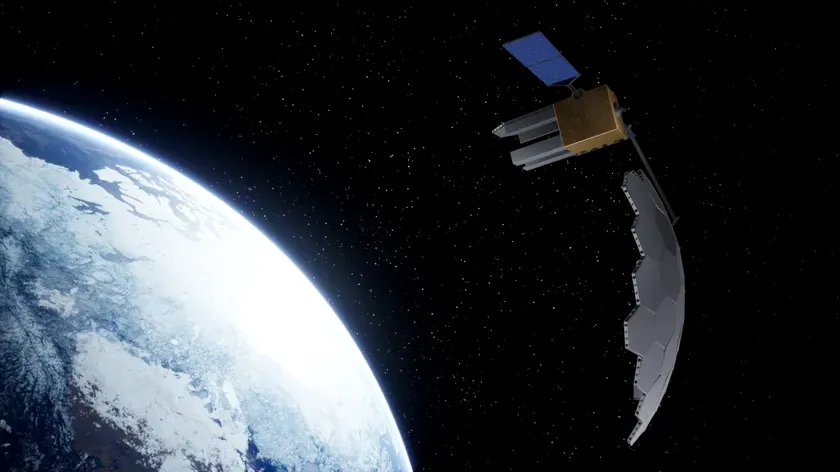

Despite the formidable gauntlet of engineering challenges, a handful of visionary companies and ambitious initiatives are actively pushing the boundaries to make space-based computing a reality. Their diverse approaches, from lunar data vaults to distributed LEO constellations, demonstrate a profound belief in the long-term potential of off-world infrastructure, even if the milestones achieved remain relatively small compared to the ultimate vision.

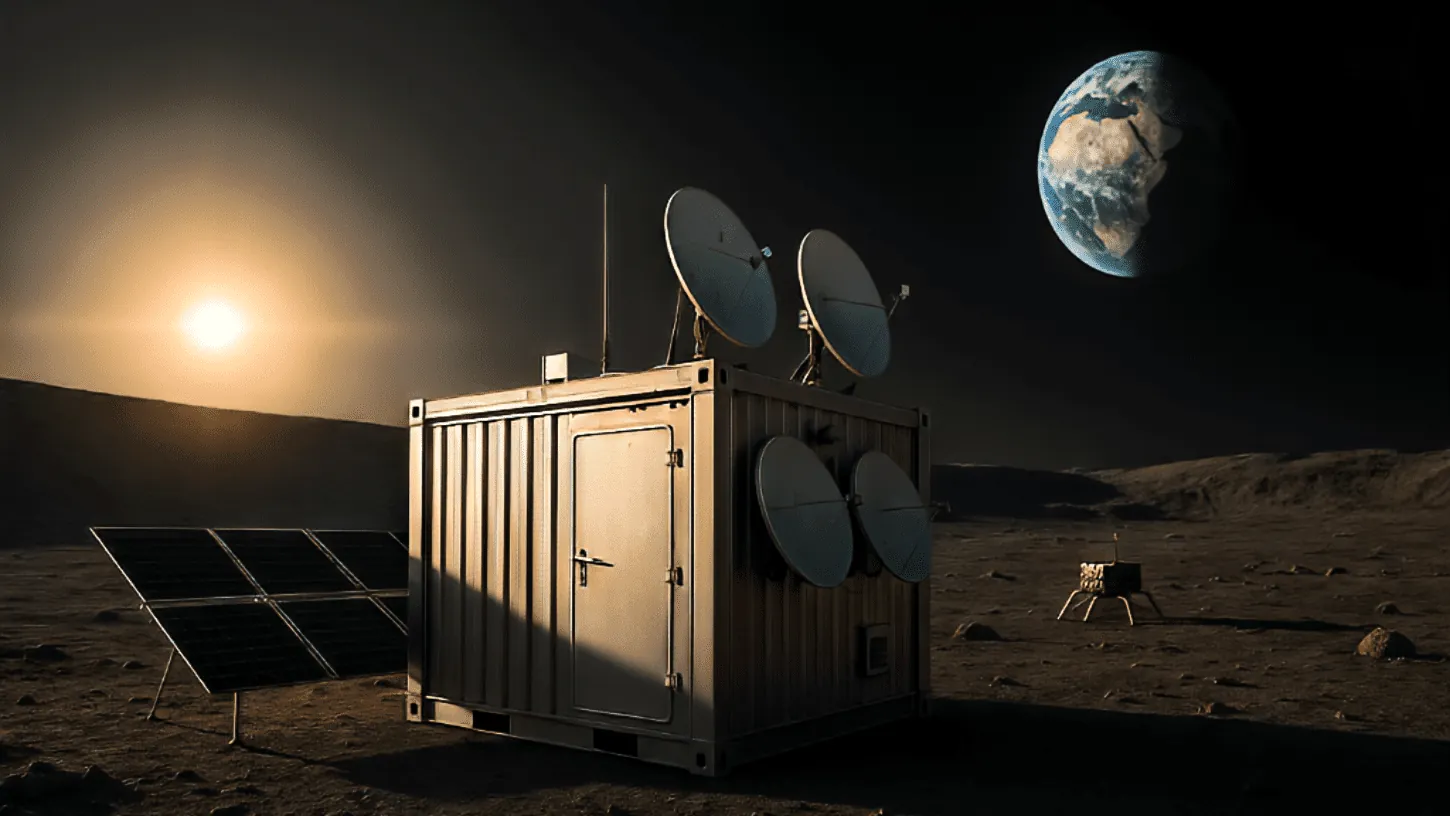

Lonestar Data Holdings: Archiving Humanity on the Moon

Lonestar Data Holdings is perhaps the most audacious player, envisioning secure, immutable data storage on and around the Moon, including at the Earth-Moon L1 Lagrange point. Their ‘Independence’ payload recently made history during Intuitive Machines’ IM-1 mission, successfully transmitting the U.S. Declaration of Independence to the Moon and receiving copies of the Constitution and Bill of Rights back on Earth. This proof-of-concept, followed by plans for a physical data center on the IM-2 mission, is laying the groundwork for a ‘disaster recovery as a service’ model. Lonestar leverages the Moon’s unique geography, physical properties (like natural cooling in permanently shadowed craters), and even ‘space law’ to offer unparalleled data sovereignty and resilience against terrestrial vulnerabilities. Future plans include scaling to petabyte-level storage, with the first multi-petabyte unit slated for 2027, highlighting a long-term commitment to lunar data infrastructure.

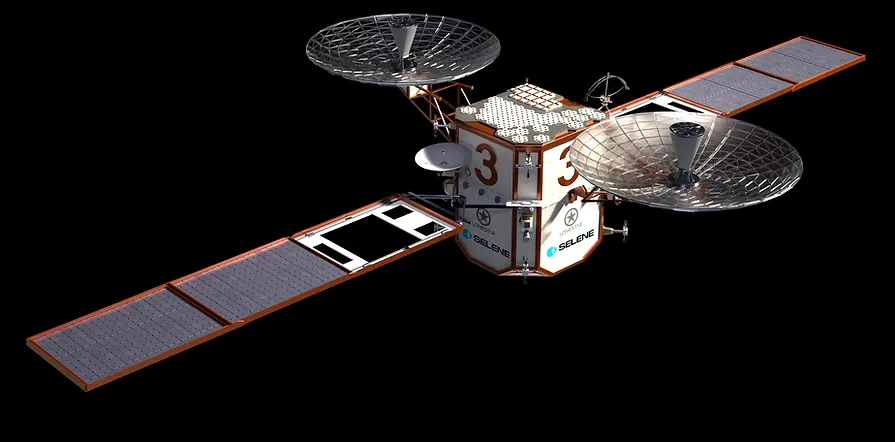

Axiom Space & Partners: LEO Edge Computing on the ISS

Closer to home, in Low Earth Orbit (LEO), Axiom Space is leading a collaboration with Spacebilt, Microchip Technology, Phison Electronics, and Skyloom to deploy orbital data center nodes (AxODC Node ISS) on the International Space Station by 2027. This initiative aims to significantly increase data storage, processing, and AI/ML capabilities directly in LEO. The primary purpose is to enable real-time data processing for Earth observation satellites and other space assets, drastically reducing the need for high-bandwidth downlinks to Earth. Axiom has already conducted tests with AWS Snowcone and their AxDCU-1, and their future nodes will leverage specialized ‘Pascari’ enterprise SSDs and radiation-hardened processors to ensure reliability in the harsh space environment. This federated network of nodes is designed to provide services to any satellite or spacecraft with compatible optical communication terminals, fostering an in-space edge computing ecosystem.

Google’s Project Suncatcher: AI TPUs in Tight Formation

Google’s ‘moonshot’ Project Suncatcher takes a distinct approach to orbital AI infrastructure. Their vision is to deploy solar-powered satellite constellations equipped with their custom Tensor Processing Units (TPUs) and free-space optical links for scalable machine learning compute. A key innovation addresses the bandwidth bottleneck: by flying satellites in tightly clustered formations, sometimes just kilometers apart, they aim to achieve data center-scale inter-satellite links. Surprisingly, Google’s research indicates their Trillium TPUs exhibit a notable degree of radiation tolerance for a five-year Low Earth Orbit mission life. In partnership with Planet, Google plans to launch prototype satellites by early 2027 to validate these models and hardware in space, pushing the boundaries of in-orbit AI.

SpaceX & Starcloud: Distributed Grids vs. Centralized Platforms

The landscape of orbital computing is also seeing a divergence in architectural philosophy. SpaceX, leveraging its vast Starlink constellation, is pursuing a distributed approach. Their plan involves integrating compute capabilities directly into Starlink v3 satellites, creating a global ‘edge-compute layer’ that scales incrementally through mass production and reusable rockets. This model focuses on low-latency inference closer to the user. In contrast, Starcloud is developing a centralized model, designing large, power-dense orbital platforms with liquid-cooled GPUs and deployable radiators. Starcloud’s long-term goal is gigawatt-scale orbital clusters, with an economic justification rooted in comparing the lifetime terrestrial electricity costs to the upfront launch and solar deployment expenses. Both represent ambitious paths, but with fundamentally different scaling strategies and target workloads.

The Cost, The Critics, and the Kessler Syndrome

Beyond the engineering marvels and corporate ambitions, the cold, hard economic realities of orbital data centers remain a significant hurdle. Launch costs today are still measured in thousands of dollars per kilogram, and the far lower figures projected for Starship remain unproven. This alone makes deploying and refreshing massive computational infrastructure astronomically expensive. Add to this the need for specialized, radiation-hardened components, which are inherently more costly and often less powerful than their commercial counterparts, and the short lifespan of commercial hardware in space (requiring costly replacements every 5-7 years), and the business case for general-purpose computing becomes profoundly difficult. This stands in stark contrast to the rapidly expanding multi-trillion dollar terrestrial data center market, where economies of scale and accessibility drive down costs.
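The launch portion of that bill is easy to sketch. Every number in the example below is a placeholder chosen for illustration: the payload mass and price per kilogram are hypothetical, and only the 5-year service life echoes the replacement cycle mentioned above. The point is the shape of the calculation, not the specific totals.

```python
def launch_cost_usd(payload_kg: float, usd_per_kg: float) -> float:
    """One-time cost of lifting payload_kg to orbit at usd_per_kg."""
    return payload_kg * usd_per_kg


def annualized_launch_cost_usd(payload_kg: float,
                               usd_per_kg: float,
                               service_years: float) -> float:
    """Launch cost spread evenly over the hardware's service life."""
    if service_years <= 0:
        raise ValueError("service_years must be positive")
    return launch_cost_usd(payload_kg, usd_per_kg) / service_years


if __name__ == "__main__":
    # Hypothetical inputs, not vendor or launch-provider figures.
    payload_kg = 1_000.0   # assumed mass of one compute module
    usd_per_kg = 2_000.0   # assumed launch price
    service_years = 5.0    # low end of the 5-7 year replacement cycle

    print(launch_cost_usd(payload_kg, usd_per_kg))                            # 2000000.0
    print(annualized_launch_cost_usd(payload_kg, usd_per_kg, service_years))  # 400000.0
```

This covers launch alone. Hardware, radiators, solar arrays, and ground operations would all be added on top.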

This economic impracticality, coupled with the maintenance nightmare, fuels much of the community’s skepticism. As one software developer aptly put it, “As a software developer who knows a thing or two about IT and data centers, this seems like a totally absurd idea, for all the reasons you’ve laid out plus the fact that you occasionally need someone to go in and do hardware maintenance.”

The public discourse, as captured in various online forums and comments, often veers into amusement and derision. Many view orbital computing as a ‘gimmick’ or a ‘shiny object,’ driven more by ‘FOMO and aesthetic futurism’ than by genuine practical necessity. Parallels are frequently drawn to past ‘outlandish’ tech ideas, such as submerged data centers, reflecting a suspicion that this is just another fleeting trend. Underlying some of this critique is a palpable distrust of corporate motives, with concerns that the move to space is, in part, an attempt to evade terrestrial regulations and accountability.

Beyond the Hype: The Pragmatic Path for Space-Based Computing

Moving beyond the grand visions and the harsh criticisms, a pragmatic path for space-based computing begins to emerge. It’s clear that orbital data centers are highly unlikely to replace terrestrial hyperscale facilities for bulk compute or training workloads in the foreseeable future. The fundamental constraints of physics, engineering, and economics simply don’t allow for it. Instead, their true value will lie in specialized, complementary roles. This includes providing critical ‘edge computing’ for the rapidly expanding ecosystem of space-based assets, offering ultra-secure disaster recovery solutions, enabling sovereign data storage beyond national borders, and potentially supporting future lunar or Martian outposts. The likely evolution is toward sophisticated hybrid space-terrestrial cloud architectures, where workloads are intelligently distributed to the environment best suited for their unique demands.

Emerging Trends & Future Directions:

- Hybrid Cloud Architectures: Seamless integration of orbital compute with terrestrial data centers, optimizing workloads for their respective environments.

- Distributed Mesh Networks: Constellations of interconnected, smaller orbital nodes for dynamic load balancing and fault tolerance.

- In-Orbit Manufacturing & Assembly (IOMA): Building and expanding data centers directly in space to reduce launch costs and enable larger structures.

- Advanced AI for Autonomy: Self-managing, self-healing systems with predictive maintenance capabilities to minimize human intervention.

- Quantum Computing Integration: Leveraging space’s unique environment for specialized quantum computing architectures.

- Interplanetary Networking: Laying the groundwork for communication and computation infrastructure for deep-space missions and colonies.

The Future Isn’t Just in the Cloud, It’s Beyond the Clouds – But Only for the Right Reasons.

Orbital data centers represent an audacious leap in computing infrastructure. While the vision of limitless power and cool efficiency is appealing, the cold, hard realities of physics and economics dictate a more nuanced future. They will not, in the foreseeable future, supplant the massive, efficient terrestrial data centers that power our daily lives. Instead, their true value will emerge in highly specialized applications: providing critical edge compute for an exploding space economy, offering an ultimate bastion for disaster recovery, and carving out unique niches for data sovereignty. For LoadSyn’s audience, the takeaway is clear: keep an eye on these ‘moonshots,’ but temper the hype with a healthy dose of engineering skepticism. The final frontier of computing is being built, but it’s a marathon of innovation, not a sprint to escape Earth’s gravity.

Orbital Data Centers: Your Questions Answered

Are orbital data centers economically feasible today?

Currently, no. The immense cost of launching hardware, the need for specialized radiation-hardened components, and the difficulty of maintenance make them significantly more expensive than terrestrial alternatives. Optimistic projections for launch costs still struggle to make them competitive for general-purpose computing.

How do you cool a data center in space without air?

In space, heat must be dissipated primarily through thermal radiation, using large radiator panels. Unlike on Earth, where air or liquid convection carries heat away, the vacuum of space requires complex engineering to conduct heat to these radiators, which then radiate it into the cold of space. It’s far from ‘free’ or ‘easy’ cooling.

What happens to regular computer chips in space?

Commercial computer chips are highly vulnerable to space radiation. They can experience Single Event Upsets (data corruption), Single Event Latch-ups (short circuits), and long-term degradation from Total Ionizing Dose. This significantly shortens their lifespan and compromises reliability, making them unsuitable for long-duration space missions without extensive (and performance-limiting) radiation hardening.

What is ‘data sovereignty’ in space?

Data sovereignty in space refers to the idea that data stored on a space-based module (like a lunar data center) would be governed by the laws of the state that launched or registered it, rather than by the laws of whichever country the data or its users happen to be in. This could offer a unique legal framework for sensitive data, providing an alternative to traditional geopolitical jurisdictions, though it also raises concerns about accountability.

Will orbital data centers replace Earth-based ones?

In the foreseeable future, it is highly unlikely. Orbital data centers are better suited for specialized ‘edge computing’ in space, disaster recovery, and sovereign data storage. Terrestrial data centers will remain dominant for bulk processing and AI training due to their massive scale, lower costs, and ease of maintenance.