AI’s Insatiable Hunger: The Memory Crisis Devouring PC Gaming

The computing landscape is undergoing a seismic shift, opening an ever-widening gap between the demands of artificial intelligence and the increasingly difficult reality for PC gamers. At the heart of this conflict lies High-Bandwidth Memory (HBM), a revolutionary technology that has become indispensable for AI accelerators. Surging demand for HBM is now spilling over into the component markets gamers rely on. The result is severe supply constraints and skyrocketing prices for everything from DDR5 RAM to the GDDR6/6X on our graphics cards, and even the NAND flash in our SSDs. This isn’t a temporary market fluctuation; it’s a fundamental reordering of priorities that puts the future of affordable PC gaming hardware at significant risk.

Key Takeaways

- AI demand for High-Bandwidth Memory (HBM) is diverting critical wafer and packaging capacity away from consumer components.

- Memory manufacturers are prioritizing high-margin HBM, leading to severe supply constraints and skyrocketing prices for consumer DDR5, GDDR6/6X, and NAND.

- New fabrication facilities won’t alleviate the consumer market’s shortfall until at least 2027-2029, as their initial output will also prioritize enterprise AI products.

- Gamers are becoming an ‘afterthought’ in memory allocation, resulting in prolonged high prices, limited availability, and slower innovation for enthusiast PC hardware.

- The current market conditions are driving a sense of ‘generational scarcity’ and frustration within the PC gaming community, extending the relevance of older standards like DDR4.

HBM: The Brain Behind AI’s Boom (and Your PC’s Bust)

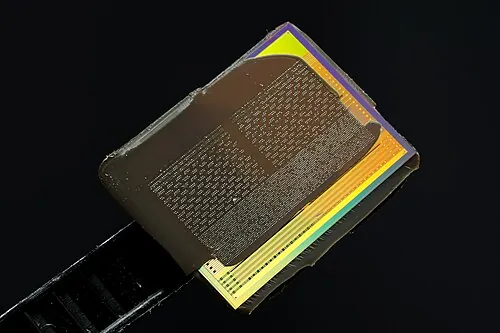

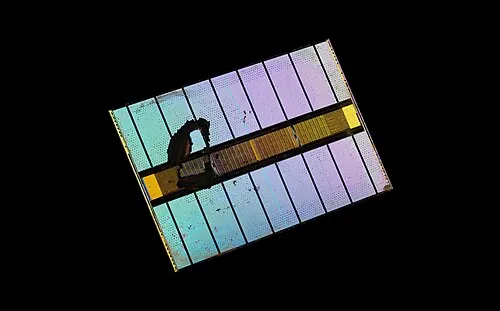

High-Bandwidth Memory (HBM) represents a paradigm shift in memory architecture, fundamentally different from the conventional DRAM in consumer PCs and GPUs. Traditional DRAM packages sit side by side on a circuit board. HBM instead stacks multiple memory dies vertically and connects them with Through-Silicon Vias (TSVs) and microbumps. This design allows an exceptionally wide interface: 1024 bits per stack for HBM3 and HBM3E, doubling to 2048 bits with HBM4. That is far wider than the 64-bit to 384-bit interfaces of DDR5 or GDDR6/6X. The width translates directly into bandwidth. HBM3 delivers about 819 GB/s per stack, and HBM3E pushes past 1.2 TB/s. HBM also offers better power efficiency, thanks to lower voltages and much shorter connections. Together, these traits make it indispensable for AI accelerators, which must keep thousands of compute units continuously fed with model weights and data. For these workloads, memory bandwidth is frequently the limiting factor. HBM isn’t just an upgrade there; it has become the standard choice for flagship accelerators, and conventional memory standards struggle to keep pace.
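The bandwidth figures above follow from simple arithmetic. Peak bandwidth equals interface width times per-pin data rate, divided by eight bits per byte. Below is a minimal Python sketch of that formula. The per-pin rates are illustrative assumptions, not specifications for any particular product: 6.4 Gb/s for HBM3, 9.6 Gb/s for HBM3E, DDR5-6400, and 21 Gb/s GDDR6X on a 384-bit bus. Real parts ship at a range of speeds.

```python
"""Peak theoretical bandwidth from interface width and per-pin data rate.

The per-pin rates below are illustrative assumptions chosen to match the
figures quoted in the article, not vendor specifications for a specific part.
"""


def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Return peak bandwidth in GB/s: (bus width x Gb/s per pin) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# (label, interface width in bits, assumed data rate per pin in Gb/s)
CONFIGS = [
    ("HBM3 stack", 1024, 6.4),
    ("HBM3E stack", 1024, 9.6),
    ("DDR5-6400 module", 64, 6.4),
    ("GDDR6X card, 384-bit bus", 384, 21.0),
]

if __name__ == "__main__":
    for label, width, rate in CONFIGS:
        print(f"{label:<26} {peak_bandwidth_gb_s(width, rate):8.1f} GB/s")
```

Under these assumptions the script prints about 819 GB/s for an HBM3 stack and about 1,229 GB/s for an HBM3E stack. It prints 51.2 GB/s for a single DDR5-6400 module and about 1,008 GB/s for an entire 384-bit GDDR6X graphics card. The width, not the pin speed, does the work here: each HBM3E pin runs at less than half the GDDR6X rate, yet one stack roughly matches a whole high-end card’s memory subsystem.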

HBM vs. Traditional Memory: A Fundamental Divide

| Feature | HBM (HBM3/3E) | DDR5/GDDR6X (Consumer) |
|---|---|---|
| Primary Use Case | AI Accelerators, HPC, Supercomputers | Consumer PCs, Gaming GPUs |
| Architecture | 3D-stacked DRAM dies, 1024-bit interface per stack (2048-bit with HBM4) | Planar DRAM packages, narrower interface (64-384 bit) |
| Typical Bandwidth | ~819 GB/s (HBM3) to 1.2 TB/s+ (HBM3E) per stack | ~38-64 GB/s per DDR5 module (DDR5-4800 to DDR5-8000); ~400-1,000 GB/s per GDDR6X graphics card |
| Power Efficiency | Very High (lower voltage, shorter traces) | Good, but higher energy per bit at peak bandwidth |
| Packaging Complexity | Advanced (TSVs, microbumps, interposer/CoWoS) | Standard (BGA packages placed on the PCB) |
| Cost & Manufacturing Priority | Extremely High Margin, Top Priority | Lower Margin, Secondary Priority |

Key Players in the HBM Race:

- SK hynix: A true pioneer, SK hynix led HBM development and mass production, notably with HBM3 and HBM3E. They’re a key supplier to major AI GPU manufacturers like Nvidia and continue to innovate with 12-layer and 16-layer HBM3E stacks, leveraging advanced packaging like MR-MUF for enhanced thermal dissipation.

- Samsung: As a global DRAM powerhouse, Samsung has rapidly scaled its HBM production, with products like the HBM3E 12H ‘Shinebolt’. Their innovation extends to advanced packaging, utilizing thermal compression non-conductive film to achieve high-capacity stacks within existing form factors, directly challenging rivals.

- Micron: Actively engaged in developing and mass-producing HBM3E, Micron is focusing on delivering high performance, capacity, and power efficiency. Their offerings include 24GB 8-high cubes and upcoming 36GB 12-high cubes, positioning them as a critical player in the high-end memory market.

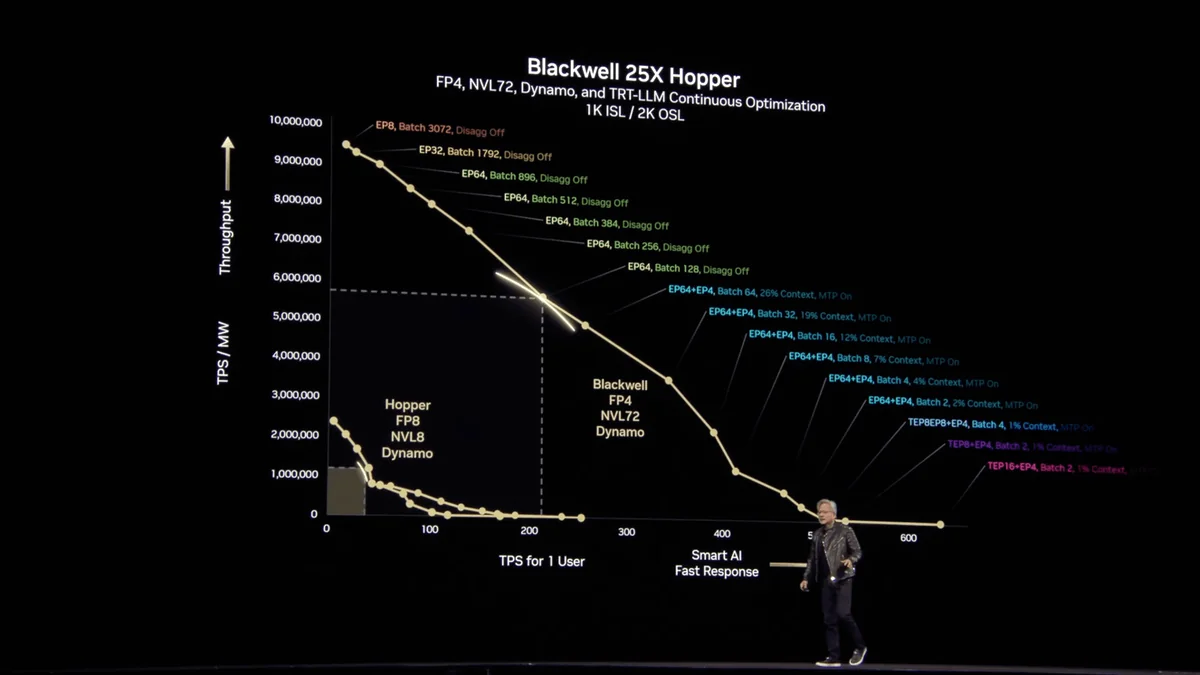

- Nvidia: The undisputed dominant force in AI GPUs, Nvidia drives much of the HBM demand for its cutting-edge Hopper and Blackwell architectures. These AI accelerators are fundamentally reliant on HBM to deliver their groundbreaking performance, making Nvidia a primary catalyst for the HBM market’s growth.

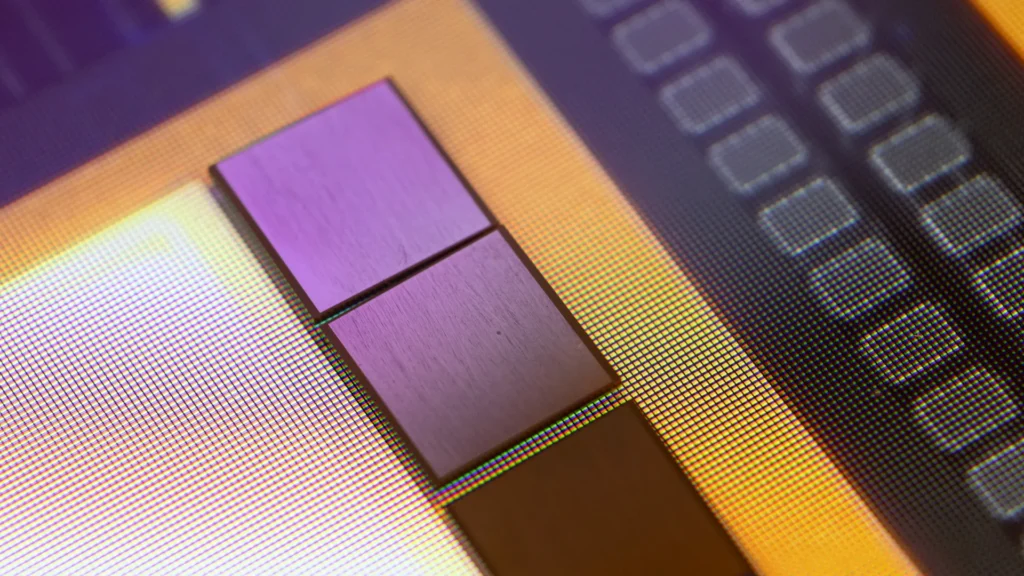

- TSMC: This crucial foundry partner runs critical advanced packaging technologies like CoWoS (Chip-on-Wafer-on-Substrate), which places HBM stacks beside AI processors on a silicon interposer. It is also slated to manufacture logic base dies for next-generation HBM4. TSMC’s capabilities are a vital, and often bottlenecked, part of the complex HBM manufacturing ecosystem.

The Industrial Choke Point: Wafer Capacity, Packaging, and Power

The complex construction of HBM, particularly its 3D stacking and reliance on TSVs, consumes significantly more wafer capacity and advanced packaging resources than conventional memory. HBM dies are larger for the same capacity, and stacking adds yield losses. Micron has estimated that HBM3E requires roughly three times the wafer supply of DDR5 to produce the same number of bits. Advanced packaging technologies such as TSMC’s CoWoS (Chip-on-Wafer-on-Substrate) and SK hynix’s Advanced Mass Reflow Molded Underfill (MR-MUF) are now critical bottlenecks. These processes are intricate and time-consuming, and they require dedicated, state-of-the-art facilities. Wafer starts and cleanroom space are finite, so capacity devoted to HBM is capacity no longer producing consumer-grade components. This industrial choke point means that even a manufacturer with ample raw silicon cannot simply produce more of everything. The specialized HBM steps absorb the bottleneck resources, and the resulting scarcity ripples across the entire memory supply chain.
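The wafer-capacity argument can be made concrete with a toy model. The Python sketch below assumes a fab with a fixed number of monthly wafer starts, all values hypothetical. It also assumes a round 3x wafer-per-bit penalty for HBM, chosen for illustration. It is not a model of any real manufacturer’s output; it only shows how the trade-off behaves.

```python
"""Toy model of how shifting wafer starts to HBM shrinks conventional DRAM output.

All numbers are hypothetical. The 3x wafer-per-bit penalty is an assumed
round figure for illustration, not a measured value for any vendor.
"""

from dataclasses import dataclass


@dataclass
class FabPlan:
    wafer_starts: int               # wafers processed per month (hypothetical)
    bits_per_wafer: float           # conventional DRAM output per wafer, arbitrary units
    hbm_share: float                # fraction of wafer starts allocated to HBM (0..1)
    hbm_wafer_penalty: float = 3.0  # assumed wafer cost of an HBM bit relative to a DRAM bit


def output_split(plan: FabPlan) -> tuple[float, float]:
    """Return (conventional_bits, hbm_bits) produced under the plan."""
    if not 0.0 <= plan.hbm_share <= 1.0:
        raise ValueError("hbm_share must be between 0 and 1")
    hbm_wafers = plan.wafer_starts * plan.hbm_share
    conventional_wafers = plan.wafer_starts - hbm_wafers
    conventional_bits = conventional_wafers * plan.bits_per_wafer
    # Each HBM bit costs more wafer area (larger dies, TSV area, stacking yield loss).
    hbm_bits = hbm_wafers * plan.bits_per_wafer / plan.hbm_wafer_penalty
    return conventional_bits, hbm_bits


if __name__ == "__main__":
    for share in (0.0, 0.1, 0.2, 0.3):
        plan = FabPlan(wafer_starts=100_000, bits_per_wafer=1.0, hbm_share=share)
        conv, hbm = output_split(plan)
        print(f"HBM share {share:.0%}: conventional {conv:,.0f}, "
              f"HBM {hbm:,.0f}, total {conv + hbm:,.0f}")
```

With these made-up inputs, moving 20% of wafer starts to HBM cuts conventional output by 20%. It adds only a third as many HBM bits in return, so total bit output falls by roughly 13%. Under the model’s assumptions, a manufacturer can earn more while shipping fewer bits overall, and the bits it stops shipping are the consumer ones.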

“I think the biggest issue we are now having is not a compute glut, but it’s power — it’s sort of the ability to get the builds done fast enough close to power. So, if you can’t do that, you may actually have a bunch of chips sitting in inventory that I can’t plug in. In fact, that is my problem today. It’s not a supply issue of chips; it’s actually the fact that I don’t have warm shells to plug into.”

— Satya Nadella, Microsoft CEO

These constraints are not fleeting; they are systemic and long-term. Building new fabrication facilities (fabs) takes multi-billion-dollar investments and years of lead time. Significant new capacity is not expected until 2027 at the earliest, and likely not before 2029. Even when these fabs begin production, their output will be heavily skewed towards high-margin HBM and lucrative enterprise contracts, leaving the consumer market in a sustained shortfall. Compounding this, as Microsoft CEO Satya Nadella highlighted, is the escalating demand for electrical power to run massive AI data centers. His remarks suggest that chips are being purchased faster than they can be installed. That means the HBM and other memory inside them has already claimed factory capacity, even while the hardware waits for powered data-center space.

The Gamer’s Dilemma: Soaring Prices and Second-Tier Status

For PC gamers, the consequences of this industrial reorientation are stark and immediate: skyrocketing prices and severely limited availability for crucial components. Consumer-grade DDR5 RAM modules, the GDDR6/6X memory essential for modern graphics cards, and even high-performance NAND storage for SSDs have all seen significant price hikes and reduced stock. Memory manufacturers, driven by the immense profitability of HBM for enterprise AI, are strategically prioritizing these high-margin contracts. This effectively relegates the consumer market to an ‘afterthought’ in their memory allocation strategies. It’s not an accidental shortage; it’s a deliberate business decision that views the gaming community as a secondary priority compared to the multi-billion dollar AI sector. This stark reality means gamers are paying more for less, facing unprecedented challenges in building or upgrading their systems.
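The ‘afterthought’ dynamic behaves like a priority queue. If supply goes to the highest-margin buyers first, consumers receive whatever is left. The Python sketch below assumes a strict margin-first policy with made-up segment names, demand and margin values. Real allocation runs through long-term contracts and negotiation, so treat this as a simplification rather than how any manufacturer actually decides.

```python
"""Margin-first allocation of a fixed memory supply (illustrative only).

Segment names, demand, and margin figures are hypothetical placeholders.
"""


def allocate_by_margin(supply: float,
                       orders: list[tuple[str, float, float]]) -> dict[str, float]:
    """Fill orders (name, demand, margin) from highest margin to lowest until supply runs out."""
    allocation: dict[str, float] = {}
    remaining = supply
    for name, demand, _margin in sorted(orders, key=lambda o: o[2], reverse=True):
        granted = min(demand, remaining)  # never grant more than is left
        allocation[name] = granted
        remaining -= granted
    return allocation


if __name__ == "__main__":
    # (segment, demand in arbitrary units, margin as a fraction): all hypothetical
    orders = [
        ("consumer (DDR5, GDDR6/6X)", 50.0, 0.25),
        ("enterprise AI (HBM)", 60.0, 0.60),
        ("other enterprise contracts", 30.0, 0.40),
    ]
    for name, granted in allocate_by_margin(100.0, orders).items():
        print(f"{name:<28} {granted:6.1f}")
```

With 100 units of supply, the two enterprise segments absorb 90 units. Consumer demand of 50 receives only 10. In this sketch, consumer demand has no influence on the outcome until every higher-margin order is filled, which is the sense in which gamers become an afterthought.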

Community Voices: ‘The AI Bubble Will Burst Sooner Than I Thought…’

The PC gaming community is grappling with a potent mix of anger, desperation, and gallows humor. Comments like ‘The same ram I bought last year costs $100 more now 😢’ perfectly encapsulate the fury over inflated prices. Others resort to dark sarcasm, declaring, ‘We building a PC in 2137 Bois 🗣️🔥’, reflecting a deep-seated frustration and the feeling of generational scarcity. This collective sentiment highlights a profound skepticism that manufacturers will soon re-prioritize consumer value. Many are now openly hoping for an ‘AI bubble burst’ to restore some semblance of market sanity, even if the timeline for such a correction remains uncertain.

This prioritization doesn’t just impact pricing and availability; it also casts a long shadow over the pace of innovation and the adoption of new standards in consumer hardware. When critical resources are funneled elsewhere, research and development for gamer-centric technologies tends to slow, and new memory standards take significantly longer to reach mass-market affordability. Consequently, older, established standards like DDR4 are not merely holding their ground. Their prolonged relevance becomes a necessary economic reality for a vast segment of the PC gaming community, pushing the bleeding edge further out of reach for many.

The Road Ahead: Projections and Managing Expectations

The sobering reality is that the memory supply shortage and the accompanying high prices for consumer components are not expected to ease anytime soon. Industry projections indicate that these conditions will likely persist until at least 2027, with some forecasts extending into 2029. This extended timeline rests on two factors: the multi-year lead time and immense capital investment required to bring new fabrication facilities online, and the relentless growth of AI demand. Even as new fabs come into production, their initial output will almost certainly be absorbed by the high-margin enterprise AI sector, leaving consumer markets in sustained scarcity. Gamers should temper expectations of a quick market correction, because the foundational dynamics of semiconductor manufacturing have fundamentally shifted.

[Chart: Projected Consumer Memory Price & Availability Trends (2024-2029). A conceptual projection based on industry expert forecasts; actual market conditions may vary. Price Index: 1.0 = pre-AI-boom average. Availability Index: 1.0 = pre-AI-boom normal supply.]

The New Era: AI Reigns, Gamers Adapt

The evidence points to a stark reality: the era of readily available, affordably priced, cutting-edge consumer PC components is on an extended hiatus. AI’s demand for HBM is not a fleeting trend but a foundational shift in semiconductor manufacturing priorities. Gamers must brace for a market where premium memory and GPUs are increasingly luxury items, and where strategic purchasing (or holding onto older tech) becomes paramount. The dream of a budget-friendly, bleeding-edge PC build will remain just that for the foreseeable future, making adaptation and informed choices more critical than ever.

Frequently Asked Questions

What is High-Bandwidth Memory (HBM), and why is it critical for AI?

HBM is a type of 3D-stacked DRAM that offers significantly higher bandwidth and better power efficiency than traditional DDR or GDDR memory. It achieves this by vertically stacking multiple memory dies connected by Through-Silicon Vias (TSVs), creating an ultra-wide interface (e.g., 1024-bit). AI accelerators, especially those used to train large language models, need immense data throughput to process colossal datasets, which makes HBM indispensable for their performance.

How does AI demand for HBM affect PC gaming hardware prices?

HBM production consumes a disproportionately large share of advanced wafer capacity and highly specialized packaging resources. As AI demand surges, memory manufacturers prioritize high-margin HBM contracts, diverting these critical resources away from consumer-grade DDR5 DRAM, GDDR6/6X for graphics cards, and NAND flash. This leads to reduced supply and higher prices for gamers.

How long will the memory shortage last?

Industry projections suggest that constraints will persist until at least 2027-2029. New fabrication facilities take years to come online, and their initial output is expected to go primarily to high-margin enterprise AI products.

Should I wait to build or upgrade my gaming PC?

Given the long-term projections, waiting for ‘normal’ pricing could take several years. If you need a PC now, consider building around current-generation or slightly older components (like DDR4) or mid-range GPUs to manage costs.

Are memory manufacturers ignoring the consumer market?

While not ignored, the consumer market is now a secondary priority. Profit margins for HBM in AI are significantly higher, and manufacturing focus has shifted accordingly, putting enterprise customers ahead of the gaming community.