The Death of Micromanagement: Enter CUDA Tile

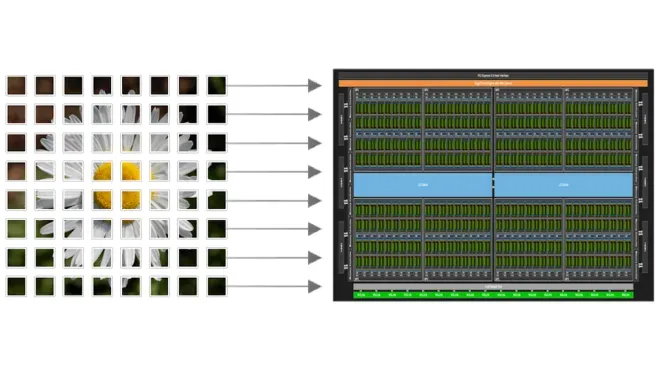

For decades, GPU programming has been synonymous with the SIMT (Single Instruction, Multiple Thread) model. It gave developers total control, but at a cost: the mental overhead of managing every individual thread, block, and memory coalescing detail. This manual orchestration was the price of performance, requiring an intimate knowledge of the underlying silicon that few possessed. However, with the release of CUDA 13.1, NVIDIA is introducing a paradigm shift. CUDA Tile moves the abstraction layer up, allowing developers to work with ’tiles’—logical subsets of arrays—while the compiler handles the messy hardware mapping to Tensor Cores and shared memory. It is, quite simply, the end of the era where developers had to act as air traffic controllers for thousands of concurrent threads.

Key Takeaways

- CUDA Tile abstracts hardware details like Tensor Cores and TMA.

- cuTile Python brings this high-performance model to the world’s most popular AI language.

- Requires CUDA 13.1, Driver R580+, and Blackwell-generation hardware (initially).

- Designed to compete with and potentially displace DSLs like OpenAI’s Triton.

cuTile Python: The Bridge to AI Efficiency

The real magic happens in cuTile Python. By using the @ct.kernel decorator, Python developers can now author kernels that rival the performance of hand-tuned CUDA C++, without ever leaving their preferred environment. This isn’t just a wrapper or a simplified interface; it’s a Domain Specific Language (DSL) that generates Tile IR, a new intermediate representation that acts as a virtual ISA for tile-based operations. By centering the programming model around Tiles—immutable values with compile-time constant shapes—NVIDIA has created a system where the compiler can automatically handle asynchrony and data movement via Tensor Memory Accelerators (TMA), ensuring that the code remains portable even as the underlying hardware architecture evolves.

The Hardware Gating: Blackwell and Beyond

Currently, the tileiras compiler is optimized for Blackwell-generation GPUs (Compute Capability 10.x/12.x). While NVIDIA has signaled future expansion, RTX 40-series (Ada Lovelace) users may have to wait for broader support. This hardware lock has created a distinct sense of FOMO among enthusiasts holding current-gen flagships, but it underscores NVIDIA’s commitment to ensuring their latest silicon is utilized to its absolute theoretical limit from day one.

cuTile vs. Traditional SIMT

| Feature | Traditional SIMT | CUDA Tile (cuTile) |

|---|---|---|

| Abstraction Level | Low (Thread-centric) | High (Data-centric) |

| Memory Management | Manual (Coalescing, Shared) | Automated (via Compiler) |

| Portability | Requires Manual Tuning | Architecture Agnostic IR |

| Learning Curve | Steep (Requires C++/CUDA) | Moderate (Python-based) |

The ‘RIP Triton’ Moment?

“RIP triton”

— Community Sentiment

The industry is already drawing battle lines. By launching cuTile, NVIDIA is directly addressing the space currently occupied by OpenAI’s Triton. While Triton has been the darling of the AI world for its simplicity and block-based approach, cuTile offers a more native, integrated path within the CUDA ecosystem. For NVIDIA, this is about reclaiming the software stack and ensuring that the most efficient way to run AI on Blackwell is through their own first-party tools. By offering a Pythonic interface that generates Tile IR, they are essentially providing the performance of a specialized HPC library with the ease of use found in NumPy or JAX. The message to the community is clear: the hardware and the language are now merging into a single, cohesive unit.

Final Verdict

CUDA Tile and cuTile Python represent the most significant shift in NVIDIA’s programming philosophy since the original CUDA launch. It democratizes high-performance GPU programming, though its current hardware-locked nature means its full impact won’t be felt until Blackwell becomes the industry standard. For those working at the bleeding edge of AI, the era of micromanaging threads is over; the era of the Tile has begun.