- The RTX 3060’s generous 12GB VRAM proved to be its unexpected secret weapon, providing it with remarkable longevity compared to its 8GB contemporaries and even newer, less-equipped successors.

- Today’s most demanding games, coupled with the explosion of local AI and Large Language Model (LLM) workloads, are ravenous for VRAM, rendering 8GB GPUs a questionable short-term investment for serious users.

- Nvidia’s continued adherence to 8GB VRAM and a narrow 128-bit memory bus in its mainstream RTX 4060 and the rumored RTX 5060 models is sparking significant concern regarding their long-term value and future-proofing capabilities.

- Savvy competitors, including Intel with its Arc B580 and AMD’s latest Radeon offerings, are strategically gaining market share by prioritizing and delivering higher VRAM capacities in the crucial mid-range segment.

- Beyond raw computational power, a GPU’s VRAM capacity and memory bus width are now arguably the most critical specifications determining its future relevance and ability to handle evolving software demands.

The Unsung Hero’s Curtain Call: Why the RTX 3060 Endured

The NVIDIA GeForce RTX 3060 is finally taking its bow, concluding an unexpectedly long and impressive run of nearly five years. Upon its debut, the card’s 12GB of GDDR6 VRAM was often considered an excessive allocation for a mid-range GPU. Fast forward to 2025, however, and this seemingly generous VRAM buffer has emerged as the RTX 3060’s most prescient feature. Its successors, by contrast, are now struggling to justify their comparatively meager memory configurations. This article will thoroughly dissect how a single, standout specification elevated a competent mid-range GPU into an enduring benchmark for long-term value and future-proofing. We’ll explore why its impending retirement marks a significant void in the market, particularly for PC gamers and the rapidly expanding community of local AI developers.

A Deep Dive into the RTX 3060’s Architecture and Key Specifications

| Graphics Engine | NVIDIA® GeForce RTX™ 3060 |
|---|---|
| Bus Standard | PCI Express 4.0 |
| Video Memory | 12GB GDDR6 |
| Memory Speed | 15 Gbps |
| Memory Interface | 192-bit |
| CUDA Cores | 3584 |
| Boost Clock | 1777 MHz |
| Memory Bandwidth | 360 GB/s |
| TDP | 170 W |
| Launch MSRP | $329 USD (Feb 2021) |

Launched with the Ampere GA106 GPU at its core, the RTX 3060 arrived featuring 3584 CUDA cores, a robust 192-bit memory interface, and a 170W Thermal Design Power (TDP). While its raw rasterization performance was certainly commendable for its $329 MSRP, it was the substantial 12GB of GDDR6 memory that truly distinguished it. This VRAM allocation was a notable outlier in NVIDIA’s product stack at the time, remarkably surpassing even its higher-tier siblings like the 3060 Ti, 3070, and 3080 in raw memory capacity. This decision, whether a calculated strategic move to counter AMD’s generous VRAM offerings or a fortunate consequence of optimizing for the 192-bit bus, inadvertently became the card’s most pivotal feature, granting it a longevity that few could have predicted.

The VRAM Prophecy: Why 12GB Became Indispensable

“I’d get the 12GB B580 because problem is 8GB GPUs even at 1080p can get all 8 gigs of VRAM fully saturated, not ideal for using for a few years down the road…”

— Fandom Pulse Commentary

Indeed, by 2025 the gaming landscape has changed dramatically. The push for visual fidelity, intricate ray tracing effects, and vast, highly detailed open-world environments is driving GPUs to their memory limits. Titles that once performed adequately on 8GB cards now frequently suffer crippling stutters and steep frame rate drops once their VRAM is saturated, an issue that can affect even 1080p gameplay. The RTX 3060’s 12GB buffer provided the essential headroom. That extra capacity let it handle these escalating demands with far greater stability than its 8GB rivals, solidifying its position as a remarkably reliable and long-lasting choice for gamers.


Nvidia’s Successor Paradox: The RTX 4060 and RTX 5060’s VRAM Dilemma

The introduction of the RTX 4060 in June 2023, priced at $299, was met with considerable community backlash, a sentiment echoed across forums and reviews. The primary points of contention were its 8GB VRAM configuration and its narrower 128-bit memory bus. While the card did bring advances in power efficiency and DLSS 3 Frame Generation, its VRAM capacity felt like a distinct step backward next to its 12GB predecessor. This trend appears poised to continue with the rumored RTX 5060, which is also expected to feature a 128-bit bus. That prospect has reignited fears among enthusiasts about insufficient memory headroom for the next wave of demanding games and AI applications. This perceived VRAM ‘regression’ from Nvidia has, paradoxically, only served to solidify the RTX 3060’s prescient legacy.

| GPU Model | VRAM | Memory Interface | Memory Bandwidth (GB/s) |
|---|---|---|---|
| RTX 3060 (12GB) | 12 GB GDDR6 | 192-bit | 360 |
| RTX 4060 (8GB) | 8 GB GDDR6 | 128-bit | 272 |
| RTX 5060 (Rumored) | 8 GB GDDR6 | 128-bit | ~272 (estimated) |

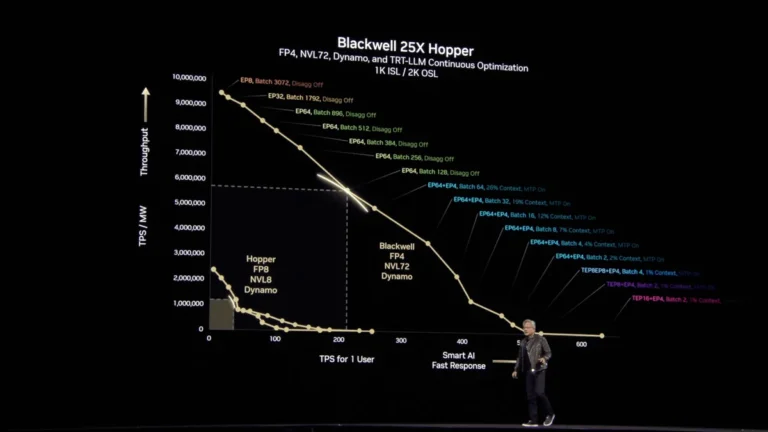
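The bandwidth column above follows from the bus width and the per-pin memory speed. The sketch below is a rough illustration, not a vendor formula sheet: the function names are hypothetical, and it only uses the figures quoted in this article's tables. It reproduces the RTX 3060's 360 GB/s and works backward to the memory speed implied by the RTX 4060's 272 GB/s on a 128-bit bus.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bus width in bits / 8 bits per byte) * per-pin rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


def implied_data_rate_gbps(bus_width_bits: int, bandwidth_gbs: float) -> float:
    """Invert the formula to recover the per-pin data rate from a quoted bandwidth."""
    return bandwidth_gbs * 8 / bus_width_bits


# RTX 3060: 192-bit bus at 15 Gbps, as listed in the spec table.
rtx_3060_bandwidth = memory_bandwidth_gbs(192, 15.0)

# RTX 4060: 128-bit bus and 272 GB/s, as listed in the comparison table.
rtx_4060_rate = implied_data_rate_gbps(128, 272.0)

print(f"RTX 3060 peak bandwidth: {rtx_3060_bandwidth:.0f} GB/s")
print(f"RTX 4060 implied memory speed: {rtx_4060_rate:.0f} Gbps")
```

These are theoretical peaks. Real-world throughput also depends on cache behavior and access patterns, which this sketch ignores.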

In the world of high-performance computing, raw TFLOPS and innovative features like DLSS 3 are undoubtedly appealing. However, an 8GB VRAM buffer, regardless of a GPU’s theoretical processing power, often represents a hard limit. Once this memory is saturated, users experience jarring stutters, noticeable texture pop-in, and degraded visual quality that even the most sophisticated upscaling technologies cannot fully mitigate. For today’s graphically intensive games, and perhaps even more critically for emerging local AI and LLM workloads, VRAM capacity frequently serves as the ultimate arbiter, determining not just *how well* a game plays, but whether an application or model can be loaded and run at all.

The AI Revolution: Unexpected Demand for the RTX 3060

Beyond gaming, the growth of Large Language Models (LLMs) and local AI development has fueled an unexpected resurgence in demand for the RTX 3060. Coding students, enthusiasts, and even seasoned developers looking to run smaller LLMs or experiment with local AI image generation quickly converged on one realization: VRAM capacity is paramount. Many popular models, even after aggressive quantization to reduce their footprint, still run best with 12GB or more of VRAM, because spilling data into much slower system RAM carries a heavy performance penalty. In this emerging landscape, the 3060 rapidly cemented its status as the de facto budget champion, showing a versatility that extended far beyond its initial gaming-centric brief.

- 7B Parameter Models (FP16): Approximately 14GB of VRAM for inference, with fine-tuning (LoRA/QLoRA) typically demanding between 24GB and 40GB.

- 13B Parameter Models (FP16): Requires around 26GB for inference, while fine-tuning (LoRA/QLoRA) often pushes requirements to 40GB or more.

- Stable Diffusion (FP16): Inference generally needs 5-6GB of VRAM, but training a model (with a batch size of 1) escalates this to approximately 16GB.

- Quantization Impact: Aggressive quantization techniques (e.g., 4-bit) can significantly reduce VRAM requirements, sometimes enabling 7B models to run on 8GB cards, though this often comes with trade-offs in performance or accuracy.

The Rise of the VRAM Vanguard: AMD & Intel’s Counter-Play

As Nvidia appears to have deliberately curtailed VRAM allocations for its crucial mainstream ‘60’ series, competitors are seizing the opportunity. Both AMD and Intel are positioning their mid-range GPUs with significantly more generous VRAM capacities, directly addressing a primary concern within the PC gaming and AI communities. This approach is making their products increasingly attractive to users who feel underserved by Nvidia’s current memory strategy.

Intel’s Arc B580, built on the Battlemage architecture, offers 12GB of GDDR6 VRAM on a 192-bit bus. It frequently undercuts the RTX 4060 in price while delivering comparable performance. Intel’s drivers have improved, but the card still relies on Resizable BAR for best performance and may encounter quirks in older titles compared to established competitors.

AMD’s competing Radeon option is designed with a generous 16GB GDDR6 VRAM buffer, providing extensive headroom for future AAA games and highly demanding AI workloads. It is likely to command a higher price point than current entry-level cards, and initial availability can be inconsistent at launch.

“Forget to mention the arc b580, that thing has gotten SO much better than when it released, 12gb vram, and performs similar if not better the 4060 lol”

— Fandom Pulse Commentary

The Science of GPU Memory: VRAM, Bus Width, and Performance

To grasp why VRAM capacity and its underlying interface matter so much, we must look beneath the surface-level specifications. It is not just about raw gigabytes: the memory bus width and the effective memory speed (measured in Gbps per pin) together determine peak memory bandwidth, which is how quickly the GPU can move data in and out of VRAM. A wider memory bus, such as the RTX 3060’s 192-bit interface, lets more data transfer in parallel, while a faster memory speed raises the amount each pin moves per second. The critical issue arises when VRAM becomes saturated: the GPU is then forced to spill data into the much slower system RAM over the PCIe interface. This introduces severe performance penalties, manifesting as stutters and drastically reduced frame rates, even if the system is equipped with a high-end CPU.
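To put the spill penalty in perspective, the sketch below compares the RTX 3060's 360 GB/s of VRAM bandwidth against the theoretical throughput of a PCIe 4.0 link. It is an illustration under stated assumptions: the constants are the published PCIe 4.0 signalling rate (16 GT/s per lane) and 128b/130b encoding, the function name is hypothetical, and protocol overhead and system RAM speed are ignored, so real transfers are slower still.

```python
PCIE4_GT_PER_LANE = 16.0         # PCIe 4.0 signalling rate, gigatransfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie4_bandwidth_gbs(lanes: int) -> float:
    """Theoretical one-direction PCIe 4.0 throughput in GB/s, before protocol overhead."""
    return PCIE4_GT_PER_LANE * ENCODING_EFFICIENCY / 8 * lanes


VRAM_BANDWIDTH_GBS = 360.0  # RTX 3060 figure quoted in this article

for lanes in (8, 16):
    link = pcie4_bandwidth_gbs(lanes)
    ratio = VRAM_BANDWIDTH_GBS / link
    print(f"PCIe 4.0 x{lanes}: ~{link:.1f} GB/s, VRAM is roughly {ratio:.0f}x faster")
```

Even on a full x16 link, anything that spills out of VRAM is fetched at roughly a tenth of the speed of local memory, which is why saturation shows up as stutter rather than a gentle slowdown.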

Intel’s Battlemage architecture, as seen in the Arc B580, offers a compelling example of how memory subsystem and cache optimizations can maximize VRAM utility. Despite a narrower PCIe 4.0 x8 link, Battlemage’s 18MB L2 cache and efficient data pathways are designed to make the most of its 12GB of GDDR6 VRAM. This design highlights a crucial point: while architectural efficiency can mitigate some bandwidth limitations, sheer VRAM capacity remains indispensable for handling the ever-growing size of game assets and complex AI models. In stark contrast, NVIDIA’s recent pairing of narrower memory buses with just 8GB of VRAM on its mainstream ‘60’ series cards, especially when compared to the RTX 3060’s more balanced and generous design, continues to be a significant point of contention for many discerning users.

The Future of Mid-Range GPUs: VRAM as the New Battleground

The enduring legacy of the RTX 3060 has reshaped community expectations for mainstream graphics cards. The perception now is that a ‘60’ series GPU must balance raw performance with long-term future-proofing, and that balance is largely dictated by its VRAM configuration. Looking ahead, any manufacturer failing to equip its mainstream offerings with sufficient memory capacity risks alienating a substantial segment of the market. This is especially true as local AI workloads and LLM development continue to spread on personal machines. The critical question for consumers has evolved; it is no longer solely ‘how fast?’ but, with increasing urgency, ‘how much memory?’

The NVIDIA GeForce RTX 3060 (12GB) concludes its production cycle, leaving behind a legacy far more profound than its initial mid-range positioning ever suggested. It inadvertently served as a technological prophet, unequivocally demonstrating the critical, often underestimated, importance of VRAM for a GPU’s long-term relevance in an accelerating technological landscape. As subsequent generations from its own manufacturer grapple with perceived memory limitations and consumer skepticism, the RTX 3060’s generous 12GB VRAM and well-balanced memory subsystem stand as a powerful testament to either remarkable foresight or an incredibly fortunate happenstance. This benchmark of memory capacity is a standard that future GPUs, irrespective of brand, must strive to emulate if they are to genuinely cater to the evolving demands of both passionate gamers and the rapidly expanding cohort of AI developers. Its departure signifies not merely the conclusion of a product cycle, but a definitive paradigm shift in what truly defines a ‘future-proof’ graphics card.